The Historical Dictionary of Switzerland (HDS) is an academic reference work which documents the most important topics and objects of Swiss history from prehistory up to the present.

The HDS digital edition comprises about 36,000 articles organized in four main headword groups:

- Biographies,

- Families,

- Geographical entities and

- Thematic contributions.

Beyond the encyclopaedic description of entities and concepts, each article contains references to the primary and secondary sources that its authors drew on.

Data

Goals

Our projects revolve around linking the HDS to external data and aim at:

- Entity linking towards HDS

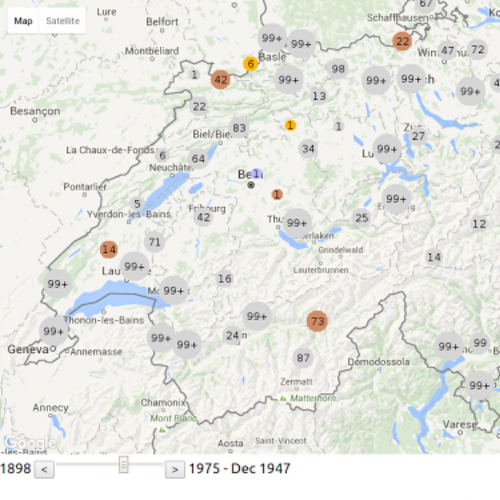

The objective is to link named entity mentions discovered in historical Swiss newspapers to their corresponding HDS articles.

- Exploring reference citations of HDS articles

The objective is to reconcile HDS bibliographic data contained in articles with SwissBib.

Named Entity Recognition

We used web services to annotate text with named entities:

- Dandelion

- Alchemy

- OpenCalais

Named entity mentions (persons and places) are matched against the entity labels of HDS entries and linked directly when exactly one HDS entry matches.
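The matching rule described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the entry identifiers, the sample labels and the `normalise` helper (case folding plus whitespace collapsing) are assumptions made for the example.

```python
from collections import defaultdict


def normalise(label):
    """Case-fold and collapse whitespace (an assumed, very light normalisation)."""
    return " ".join(label.casefold().split())


def build_label_index(hds_entries):
    """Map each normalised label to the list of HDS entry ids that carry it."""
    index = defaultdict(list)
    for entry_id, label in hds_entries:
        index[normalise(label)].append(entry_id)
    return index


def link_mention(mention, index):
    """Return the entry id if exactly one HDS entry matches, otherwise None."""
    candidates = index.get(normalise(mention), [])
    return candidates[0] if len(candidates) == 1 else None


# Hypothetical entries: the ids and the duplicated label are illustrative only.
hds_entries = [
    ("hds-001", "Henri Dunant"),
    ("hds-002", "Bern"),
    ("hds-003", "Bern"),
]
index = build_label_index(hds_entries)

unique_link = link_mention("henri  dunant", index)   # one candidate: linked
ambiguous_link = link_mention("Bern", index)         # two candidates: left unlinked
```

Mentions with several candidate entries are left unlinked here, which is where a later disambiguation step would take over.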

Further developments would include:

- handling name variants, e.g. 'W.A. Mozart' or 'Mozart' should match 'Wolfgang Amadeus Mozart'

- real disambiguation by comparing the newspaper article context with the HDS article context (a first simple similarity could be tf-idf based)

- working with a more refined NER output which comprises information about name components (first, middle and last names)
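The tf-idf based disambiguation mentioned in the list above could look roughly like this. It is a sketch under stated assumptions: the tokenizer, the smoothed idf formula, the candidate ids and the sample texts are all illustrative choices, and no such step is part of the current pipeline.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lower-case word tokens (an assumed, deliberately simple tokenizer)."""
    return re.findall(r"\w+", text.lower())


def tfidf_vectors(documents):
    """Return one sparse tf-idf vector (dict) per document, using a smoothed idf."""
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(tokenized)
    doc_freq = Counter(tok for tokens in tokenized for tok in set(tokens))
    vectors = []
    for tokens in tokenized:
        term_freq = Counter(tokens)
        vectors.append({
            tok: count * (math.log((1 + n_docs) / (1 + doc_freq[tok])) + 1)
            for tok, count in term_freq.items()
        })
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse vectors."""
    dot = sum(weight * v[tok] for tok, weight in u.items() if tok in v)
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def disambiguate(context, candidates):
    """Score each candidate HDS article text against the newspaper context."""
    ids = list(candidates)
    vectors = tfidf_vectors([context] + [candidates[i] for i in ids])
    query, docs = vectors[0], vectors[1:]
    scores = {i: cosine(query, doc) for i, doc in zip(ids, docs)}
    return max(scores, key=scores.get), scores


# Hypothetical newspaper context and candidate article texts.
context = "The council of the city of Bern voted on the new bridge over the Aare"
candidates = {
    "bern-city": "Bern, city on the Aare river, seat of the federal council",
    "bern-canton": "Bern, canton of the Swiss Confederation with alpine valleys and lakes",
}
best, scores = disambiguate(context, candidates)
```

In practice the idf weights would be estimated over the whole HDS corpus rather than over the handful of candidates, so that frequent words are properly discounted.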

Bibliographic enrichment

We work on the list of references in all articles of the HDS, with three goals:

- Finding all the sources which are cited in the HDS (several sources are cited multiple times);

- Linking all the sources with the SwissBib catalog, if possible;

- Interactively exploring the citation network of the HDS.
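As an illustration of the first goal, repeated sources can be grouped by a normalised citation string. This is only a sketch: the `citation_key` normalisation (case folding, punctuation removal) is an assumption and far cruder than real source disambiguation, and the references shown are invented placeholders, not HDS data.

```python
import re
from collections import defaultdict


def citation_key(citation):
    """Normalise a citation string: case-fold, drop punctuation, collapse spaces."""
    text = citation.casefold()
    text = re.sub(r"[^\w\s]", " ", text)
    return " ".join(text.split())


def group_citations(references_by_article):
    """Map each normalised citation to the set of article ids that cite it."""
    cited_by = defaultdict(set)
    for article_id, references in references_by_article.items():
        for reference in references:
            cited_by[citation_key(reference)].add(article_id)
    return cited_by


# Placeholder references (hypothetical, for illustration only).
references_by_article = {
    "article-a": ["A. Author, Title One, 1990.", "B. Writer: Another Title (2001)"],
    "article-b": ["a. author, title one, 1990"],
}
cited_by = group_citations(references_by_article)

# Sources cited by more than one article.
repeated = {key: ids for key, ids in cited_by.items() if len(ids) > 1}
```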

The dataset comes from the HDS metadata. It contains the list of references of every HDS article.

Result of source disambiguation and look-up into SwissBib:

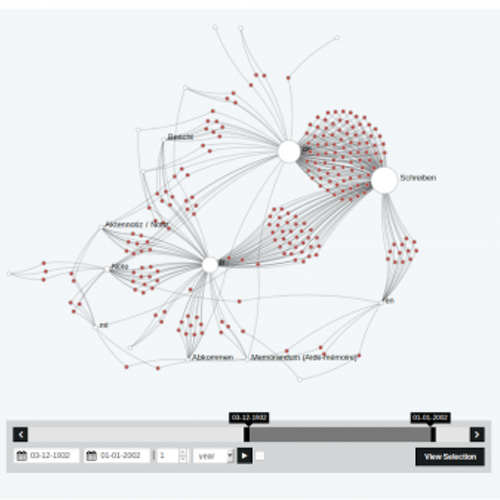

Bibliographic coupling network of the HDS articles (giant component). In bibliographic coupling, two articles are connected if they cite at least one source in common.

Node colours: Biographies (white), Places (green), Families (blue) and Topics (red).

Co-citation network of the HDS sources (giant component of degree > 15). In co-citation networks, two sources are connected if they are cited together by one or more articles.

Node colours: Publications (white), Works of the subject of an article (green), Archival sources (cyan) and Critical editions (grey).
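The two network definitions can be made concrete with a short sketch. It assumes the citation data is available as a mapping from article id to the set of sources it cites; the ids below are hypothetical, and the edge weights (number of shared sources or shared citing articles) are an added convenience rather than something the project states it computed. Extracting the giant component and filtering by degree are not shown.

```python
from collections import defaultdict
from itertools import combinations


def bibliographic_coupling(citations):
    """Edges between articles, weighted by the number of sources they share."""
    cited_by = defaultdict(set)
    for article, sources in citations.items():
        for source in sources:
            cited_by[source].add(article)
    edges = defaultdict(int)
    for articles in cited_by.values():
        for a, b in combinations(sorted(articles), 2):
            edges[(a, b)] += 1
    return dict(edges)


def co_citation(citations):
    """Edges between sources, weighted by the number of articles citing both."""
    edges = defaultdict(int)
    for sources in citations.values():
        for s, t in combinations(sorted(set(sources)), 2):
            edges[(s, t)] += 1
    return dict(edges)


# Hypothetical article -> cited sources mapping.
citations = {
    "art1": {"s1", "s2"},
    "art2": {"s2", "s3"},
    "art3": {"s1", "s2", "s3"},
}
coupling_edges = bibliographic_coupling(citations)
cocitation_edges = co_citation(citations)
```

Both edge dictionaries can be loaded into a graph library for layout and component analysis.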

Team