Interview with Beat Estermann, Project Coordinator Open Cultural Hackathon 2016

Open Cultural Data Hackathon 2016

The second Swiss Open Cultural Data Hackathon took place on 1-2 July 2016 at the Basel University Library. It was organised by the OpenGLAM.ch working group in cooperation with the Basel University Library, infoclio.ch and other partners.

A complete list of hackathon projects as well as a list of available datasets can be found on the Open Glam Hackathon website.

Beat Estermann

Felix Winter

Interview with Felix Winter, Vice-Director University Library Basel

Oleg Lavrovky

Interview with Oleg Lavrovky, Opendata.ch

Animation in the spirit of dada poetry

The computer produces animations. Dada! All your artworks are belong to us. We build the parameters, you watch the animations. Words, images, collide together into ever changing, non-random, pseudo-random, deliberately unpredictable tensile moments of social media amusement. Yay!

For this first prototype we used Walter Serner’s text “Letzte Lockerung – Manifest Dada” as a source. This text is systematically reconfigured, rewritten and thereby reinterpreted by means of machine learning using “char-rnn”.
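char-rnn trains a recurrent neural network on a text one character at a time and then samples new text from it. The sketch below is not char-rnn: it swaps in a much simpler character-level Markov chain, purely to illustrate what rewriting a source text character by character can look like. The placeholder source string, the function names and the `order` parameter are assumptions made for this example.

```python
import random
from collections import defaultdict


def build_model(text, order=3):
    """Map each `order`-character context to the characters that follow it."""
    model = defaultdict(list)
    for i in range(len(text) - order):
        model[text[i:i + order]].append(text[i + order])
    return model


def generate(model, seed, length, rng):
    """Extend `seed` one character at a time by sampling from the model."""
    order = len(seed)  # the seed must be exactly as long as the model's contexts
    out = seed
    while len(out) < length:
        followers = model.get(out[-order:])
        if not followers:  # dead end: this context never continues in the source
            break
        out += rng.choice(followers)
    return out


# Placeholder text (assumption); the project used Serner's manifesto as its source.
source = "dada dada data dada daten dada " * 4
model = build_model(source, order=3)
sample = generate(model, seed="dad", length=40, rng=random.Random(0))
print(sample)
```

Every four-character window of the output occurs somewhere in the source, so the result looks locally familiar while the overall sequence is new. A neural model such as char-rnn captures much longer dependencies than this.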

The images are in the public domain and were taken from the “Wandervögel” collection of the Schweizerisches Sozialarchiv.

Data

- Images are taken from the “Wandervögel” collection of the Schweizerisches Sozialarchiv

- Text: Walter Serner, Letzte Lockerung - Manifest Dada

- Tool: char-rnn

Team

Performing Arts Ontology

The goal of the project is to develop an ontology for the performing arts domain that makes it possible to describe the holdings of the Swiss Archives for the Performing Arts (formerly Swiss Theatre Collection and Swiss Dance Collection) and other holdings related to the performing arts, such as those represented on the Performing Arts Portal of the Specialized Information Services for the Performing Arts (Goethe University Frankfurt).

See also: Project "Linked Open Theatre Data"

Data

- Data from the www.performing-arts.eu Portal

Project Outputs

2017:

- Draft Version of the Swiss Performing Arts Ontology (Pre-Release, 24 May 2017)

2016:

- Initial Data Modelling Experiments: Swiss Performing Arts Vocabulary (deprecated)

Resources

Team

- Christian Schneeberger

- Birk Weiberg

- René Vielgut (2016)

- Julia Beck

- Adrian Gschwend

Kamusi Project: Every Word in Every Language

We are developing many resources built around linguistic data, for languages around the world. At the Cultural Data hackathon, we are hoping for help with:

- An application for translating museum exhibit information and signs in other public spaces (zoos, parks, etc.) into multiple languages, so visitors can understand the exhibit no matter where they come from. We currently have a prototype for a similar application for restaurants.

- Cultural datasets: we are looking for multilingual lexicons that we can incorporate into our database, to enhance the general vocabulary that we have for many languages.

- UI design improvements. We have software, but it's not necessarily pretty. We need the eyes of designers to improve the user experience.

Data

Team

Historical Dictionary of Switzerland Out of the Box

The Historical Dictionary of Switzerland (HDS) is an academic reference work which documents the most important topics and objects of Swiss history from prehistory up to the present.

The HDS digital edition comprises about 36,000 articles organized in 4 main headword groups:

- Biographies,

- Families,

- Geographical entities and

- Thematic contributions.

Beyond the encyclopaedic description of entities and concepts, each article contains references to the primary and secondary sources that the authors drew on when writing it.

Data

We have the following data:

- Metadata about the articles of the Historical Dictionary of Switzerland (HDS), comprising:

  - bibliographic references of HDS articles

  - article titles

- Le Temps digital archive for the year 1914

Goals

Our projects revolve around linking the HDS to external data and aim at:

- Entity linking towards HDS: the objective is to link named entity mentions discovered in historical Swiss newspapers to their corresponding HDS articles.

- Exploring reference citations of HDS articles: the objective is to reconcile HDS bibliographic data contained in articles with SwissBib.

Named Entity Recognition

We used web services to annotate text with named entities:

- Dandelion

- Alchemy

- OpenCalais

Named entity mentions (persons and places) are matched against entity labels of HDS entries and directly linked when only one HDS entry exists.
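The matching rule described above (link a mention only when exactly one HDS entry carries that label) can be sketched as follows. The entry identifiers, the sample labels and the case-insensitive normalisation are assumptions made for illustration; they are not taken from the HDS data.

```python
from collections import defaultdict


def build_label_index(entries):
    """Group entry ids by normalised label."""
    index = defaultdict(list)
    for entry_id, label in entries:
        index[label.casefold().strip()].append(entry_id)
    return index


def link_mention(mention, index):
    """Return the entry id if exactly one entry carries this label, else None."""
    candidates = index.get(mention.casefold().strip(), [])
    return candidates[0] if len(candidates) == 1 else None


# Hypothetical (id, label) pairs, not real HDS identifiers.
entries = [
    ("entry-1", "Basel"),
    ("entry-2", "Henri Dunant"),
    ("entry-3", "Baden"),
    ("entry-4", "Baden"),  # two entries share this label, so it stays unlinked
]
index = build_label_index(entries)
print(link_mention("Basel", index))
print(link_mention("Baden", index))
```

Mentions with several candidate entries are deliberately left unlinked here; resolving them is the disambiguation step listed under further developments.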

Further developments would include:

- handling name variants, e.g. 'W.A. Mozart' or 'Mozart' should match 'Wolfgang Amadeus Mozart'.

- real disambiguation by comparing the newspaper article context with the HDS article context (a first simple similarity could be tf-idf based)

- working with a more refined NER output which comprises information about name components (first, middle, last names)
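As an illustration of the first point, here is a minimal sketch of name-variant matching. The rule it uses (same surname, and every given-name part of the mention matches a given name of the full form, in order, either in full or as an initial) is an assumption chosen for this example, not the project's method.

```python
import re


def tokens(name):
    """Split on whitespace and periods: 'W.A. Mozart' -> ['w', 'a', 'mozart']."""
    return [t.casefold() for t in re.split(r"[\s.]+", name) if t]


def is_variant(mention, full_name):
    """True if `mention` could be a shortened form of `full_name`."""
    m, f = tokens(mention), tokens(full_name)
    if not m or not f or m[-1] != f[-1]:  # surnames must agree
        return False
    given = iter(f[:-1])  # shared iterator, so matches must occur in order
    for part in m[:-1]:
        # Each part must match a later given name, in full or as an initial.
        if not any(g == part or (len(part) == 1 and g.startswith(part)) for g in given):
            return False
    return True


print(is_variant("W.A. Mozart", "Wolfgang Amadeus Mozart"))
print(is_variant("Mozart", "Wolfgang Amadeus Mozart"))
print(is_variant("L. Mozart", "Wolfgang Amadeus Mozart"))
```

A surname alone matches every entry with that surname, so a rule like this only produces candidates; context-based disambiguation is still needed to choose among them.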

Bibliographic enrichment

We work on the list of references in all articles of the HDS, with three goals:

- Finding all the sources which are cited in the HDS (several sources are cited multiple times);

- Linking all the sources with the SwissBib catalog, if possible;

- Interactively exploring the citation network of the HDS.

The dataset comes from the HDS metadata. It contains lists of references in every HDS article:

Result of source disambiguation and look-up into SwissBib:

Bibliographic coupling network of the HDS articles (giant component). In bibliographic coupling, two articles are connected if they cite the same source at least once.

Biographies (white), Places (green), Families (blue) and Topics (red):

Co-citation network of the HDS sources (giant component of degree > 15). In co-citation networks, two sources are connected if they are cited together by one or more articles.

Publications (white), Works of the subject of an article (green), Archival sources (cyan) and Critical editions (grey):
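The two network definitions above can be sketched in a few lines. The article and source names are hypothetical placeholders, and the functions only build the edge sets; extracting the giant component and filtering by degree are left out.

```python
from collections import defaultdict
from itertools import combinations


def bibliographic_coupling(citations):
    """Edges between articles that share at least one cited source."""
    cited_by = defaultdict(set)
    for article, sources in citations.items():
        for source in sources:
            cited_by[source].add(article)
    edges = set()
    for articles in cited_by.values():
        edges.update(combinations(sorted(articles), 2))
    return edges


def co_citation(citations):
    """Edges between sources that are cited together by at least one article."""
    edges = set()
    for sources in citations.values():
        edges.update(combinations(sorted(set(sources)), 2))
    return edges


# Hypothetical mapping of article -> cited sources.
citations = {
    "article_a": ["source_1", "source_2"],
    "article_b": ["source_2", "source_3"],
    "article_c": ["source_4"],
}
coupling = bibliographic_coupling(citations)
cocited = co_citation(citations)
print(coupling)
print(cocited)
```

In this toy example, article_a and article_b are coupled through source_2, while article_c stays isolated in both networks.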

Team

- odi

- julochrobak

- and other team members

Visualize Relationships in Authority Datasets

Some figures

- original MACS dataset: 36.3MB

- 'wrangled' MACS dataset: 171MB

- 344134 nodes in the dataset

- some of our laptops have difficulty handling the visualization of more than 4000 nodes :(

Raw MACS data:

Transforming data.

Visualizing MACS data with Neo4j:

Visualization showing 300 and 1500 relationships, respectively:

Visualization showing 3000 relationships. To explore the relations, see the high-resolution picture graph_3000_relations.png (10.3MB).

Please show me the shortest path between “Rechtslehre” and “Ernährung”:
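Independently of Neo4j, the idea behind such a shortest-path query can be sketched with a breadth-first search over an adjacency list in plain Python. The intermediate nodes `term_x` and `term_y` are made-up placeholders, not actual MACS or GND links.

```python
from collections import deque


def shortest_path(graph, start, goal):
    """Breadth-first search; returns the nodes on a shortest path, or None."""
    previous = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:  # walk back to the start
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbour in graph.get(node, ()):
            if neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    return None


# Hypothetical undirected graph, stored as an adjacency list.
graph = {
    "Rechtslehre": ["term_x"],
    "term_x": ["Rechtslehre", "term_y"],
    "term_y": ["term_x", "Ernährung"],
    "Ernährung": ["term_y"],
}
path = shortest_path(graph, "Rechtslehre", "Ernährung")
print(path)
```

Breadth-first search visits nodes in order of distance from the start, so the first time it reaches the goal it has found a path with the fewest relationships.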

Datasets

- MACS - Multilingual Access to Subjects http://www.dnb.de/DE/Wir/Kooperation/MACS/macs_node.html

- GND - Gemeinsame Normdatei http://www.dnb.de/DE/Standardisierung/GND/gnd_node.html

Process

- get data

- transform data (e.g. with “Metafacture”)

- load data in graph database (e.g. “Neo4j”)

*it's not as easy as it sounds

Team

- Günter Hipler

- Silvia Witzig

- Sebastian Schüpbach

- Sonja Gasser

- Jacqueline Martinelli

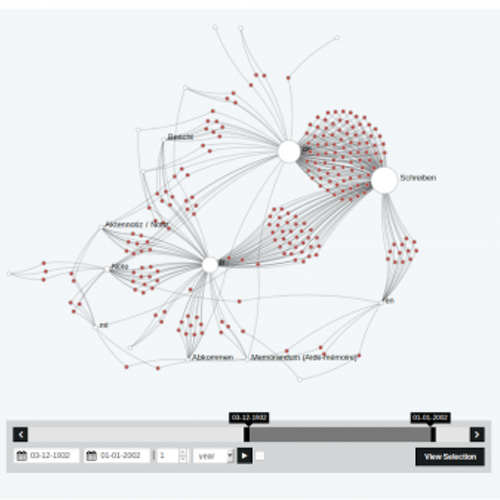

Dodis Goes Hackathon

We work with the data on documents from 1848-1975 from the Dodis database, using Nodegoat to do so.

Animation (click to open):

Data

Team

- Christof Arnosti

- Amandine Cabrio

- Lena Heizmann

- Christiane Sibille

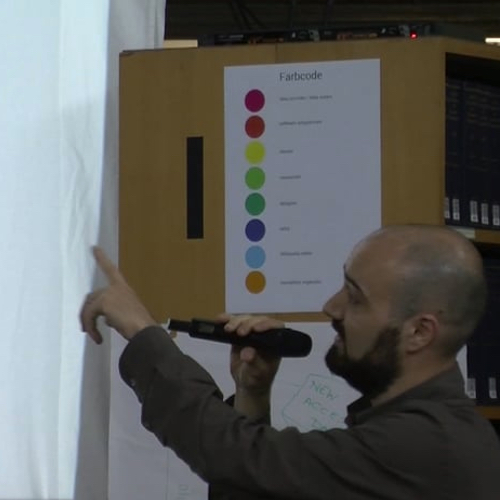

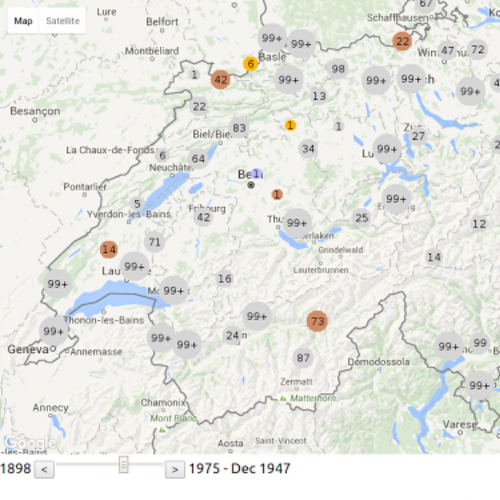

VSJF-Refugees Migration 1898-1975 in Switzerland

We developed an interactive visualization of the migration flow of (mostly Jewish) refugees migrating to or through Switzerland between 1898 and 1975. We used the Google Maps API to show the movement of about 20,000 refugees situated in 535 locations in Switzerland.

One of the major steps in the development of the visualization was to clean the data, as the migration route is given in an unstructured way. Further, we had to overcome technical challenges such as moving thousands of markers on a map all at once.

The journey of a refugee starts with the place of birth and continues with the place from which Switzerland was entered (if known). Then a series of stays within Switzerland is given. On average a refugee visited 1 to 2 camps or homes. Finally, the refugee leaves Switzerland from a designated place for a destination abroad. Due to missing information some of the dates had to be estimated, especially the departure date, for which only 60% of the records have an entry.

The movements of all refugees can be traced over time in the period of 1898-1975 (based on the entry date). The residences in Switzerland are of different types and range from poor conditions as in prison camps to good conditions as in recovery camps. We introduced a color code to display different groups of camps and homes: imprisoned (red), interned (orange), labour (brown), medical (green), minors (blue), general (grey), unknown (white).
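The journey structure and the colour code described above could be represented roughly as follows. This is a sketch under assumptions: the class and field names are invented for illustration, the place names are placeholders, and the project's actual data model is not documented here.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Colour code as listed in the text above.
CAMP_COLOURS = {
    "imprisoned": "red",
    "interned": "orange",
    "labour": "brown",
    "medical": "green",
    "minors": "blue",
    "general": "grey",
    "unknown": "white",
}


@dataclass
class Stay:
    place: str
    camp_type: str = "unknown"


@dataclass
class Journey:
    birthplace: str
    entry_place: Optional[str]  # None when the point of entry is not known
    stays: List[Stay] = field(default_factory=list)
    exit_place: Optional[str] = None
    destination: Optional[str] = None

    def stops(self):
        """Known places in travel order, skipping missing ones."""
        places = [self.birthplace, self.entry_place]
        places += [stay.place for stay in self.stays]
        places += [self.exit_place, self.destination]
        return [p for p in places if p]


def colour_for(stay):
    """Marker colour for a stay; unrecognised types fall back to 'unknown'."""
    return CAMP_COLOURS.get(stay.camp_type, CAMP_COLOURS["unknown"])


# Placeholder journey, not taken from the dataset.
journey = Journey(
    birthplace="place_a",
    entry_place=None,
    stays=[Stay("camp_1", "labour"), Stay("home_2", "medical")],
    exit_place="place_b",
    destination="place_c",
)
print(journey.stops())
print([colour_for(stay) for stay in journey.stays])
```

Keeping missing values as `None` rather than guessing them makes it explicit which parts of a route are known and which would have to be estimated.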

As additional information, to give the dots on the map a face, we researched famous people who stayed in Switzerland in the same time period. Further, historical information was included to connect the movements of refugees to historical events.

Data

Code

Team

- Bourdic Maïwenn

- Noyer Frédéric

- Tutav Yasemin

- Züger Marlies

Manesse Gammon

The Codex Manesse, or «Große Heidelberger Liederhandschrift», is an outstanding source of Middle High German Minnesang, a book of songs and poetry the main body of which was written and illustrated between 1250 and 1300 in Zürich, Switzerland. The Codex, produced for the Manesse family, is one of the most beautifully illustrated German manuscripts in history.

The Codex Manesse is an anthology of the works of about 135 Minnesingers of the mid 12th to early 14th century. For each poet, a portrait is shown, followed by the text of their works. The entries are ordered by the social status of the poets, starting with the Holy Roman Emperor Heinrich VI, the kings Konrad IV and Wenzeslaus II, down through dukes, counts and knights, to the commoners.

Page 262v, entitled «Herr Gœli», is named after a member of the Golin family originating from Badenweiler, Germany. «Herr Gœli» may be identified as either Konrad Golin or his nephew Diethelm, both high-ranking clergymen in 13th-century Basel. The illustration, which is followed by four songs, shows «Herr Gœli» and a friend playing a game of backgammon (at that time referred to as «Pasch», «Puff», «Tricktrack», or «Wurfzabel»). The game is in full swing, and the players argue about a specific move.

Join in and start playing a game of backgammon against «Herr Gœli». But watch out: «Herr Gœli» speaks Middle High German, and despite his respectable age he is quite lucky with the dice!

Instructions

You control the white stones. The object of the game is to move all your pieces from the top-left corner of the board clockwise to the bottom-left corner and then off the board, while your opponent does the same in the opposite direction. Click on the dice to roll, click on a stone to select it, and again on a game space to move it. Each die result tells you how far you can move one piece, so if you roll a five and a three, you can move one piece five spaces, and another three spaces. Or, you can move the same piece three, then five spaces (or vice versa). Rolling doubles allows you to make four moves instead of two.

Note that you can't move to spaces occupied by two or more of your opponent's pieces, and a single piece without at least another ally is vulnerable to being captured. Therefore it's important to try to keep two or more of your pieces on a space at any time. The strategy comes from attempting to block or capture your opponent's pieces while advancing your own quickly enough to clear the board first.
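The dice and landing rules described above can be written down compactly. This is a minimal sketch with invented function names; it is not the game's actual engine and leaves out board layout and bearing off.

```python
def moves_for_roll(die_a, die_b):
    """Distances available from one roll: doubles give four moves, otherwise two."""
    return [die_a] * 4 if die_a == die_b else [die_a, die_b]


def landing(opponent_count):
    """Classify a target space by the number of opposing pieces on it."""
    if opponent_count >= 2:
        return "blocked"  # two or more opposing pieces: no move allowed here
    if opponent_count == 1:
        return "capture"  # a lone opposing piece is vulnerable
    return "free"


print(moves_for_roll(5, 3))
print(moves_for_roll(4, 4))
print(landing(2), landing(1), landing(0))
```

With a roll of five and three, the two distances can go to two different pieces or to the same piece in either order, which is why the function returns a list of individual moves rather than a single sum.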

And don't worry if you don't understand what «Herr Gœli» is telling you in Middle High German: Point your mouse at his message to get a translation into modern English.

Updates

2016/07/01 v1.0: Index page, basic game engine

2016/07/02 v1.1: Translation into Middle High German, responsive design

2016/07/04 v1.11: Minor bug fixes

Data

- Universitätsbibliothek Heidelberg: Der Codex Manesse und die Entdeckung der Liebe

- Universitätsbibliothek Heidelberg: Große Heidelberger Liederhandschrift – Cod. Pal. germ. 848 (Codex Manesse)

- Wikimedia Commons: Codex Manesse

- Historisches Lexikon der Schweiz: Manessische Handschrift

- Historisches Lexikon der Schweiz: Baden, von

- Wörterbuchnetz: Mittelhochdeutsches Handwörterbuch von Matthias Lexer

Author

- Prof. Thomas Weibel, Thomas Weibel Multi & Media

Historical maps

The group discussed the usage of historical maps and geodata using the Wikimedia environment. Out of the discussion we decided to work on 4 learning items and one small hack.

The learning items are:

- The workflow for historical maps - the Wikimaps workflow

- Wikidata 101

- Public artworks database in Sweden - using Wikidata for storing the data

- Mapping maps metadata to Wikidata: Transitioning from the map template to storing map metadata in Wikidata. Sum of all maps?

The small hack is:

- Creating a 3D gaming environment in Cities: Skylines based on data from a historical map.

Data

This hack is based on an experiment in mapping a demolished and rebuilt part of Helsinki. In DHH16, a Digital Humanities hackathon held in Helsinki in May 2016, the goal was to create a historical street view. The source aerial image was georeferenced with Wikimaps Warper and traced with OpenHistoricalMap; historical maps from the Finna aggregator were georeferenced with the help of the Geosetter program and finally uploaded to Mapillary for the final street view experiment.

The Small Hack - Results

Our goal has been to recreate the historical area of Helsinki in a modern game engine provided by Cities: Skylines (Developer: Colossal Order). This game provides a built-in map editor which can read heightmaps (DEM) and rearrange the terrain accordingly. There are some limits, though: the heightmap has to be exactly 1081x1081px in size to be translated into the game's terrain.
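Because of the fixed size requirement, an elevation grid of any other resolution has to be resampled first. The sketch below shows one way to do that with bilinear interpolation in plain Python. It assumes the elevation data is already available as a rectangular list of rows; the function name is made up, and writing the result to an image file in the format the editor expects is left out.

```python
TARGET = 1081  # edge length in pixels required by the map editor, per the text


def resample(grid, size=TARGET):
    """Bilinearly resample a rectangular grid of heights to size x size."""
    rows, cols = len(grid), len(grid[0])
    out = []
    for y in range(size):
        fy = y * (rows - 1) / (size - 1)  # fractional source row
        y0 = int(fy)
        y1 = min(y0 + 1, rows - 1)
        ty = fy - y0
        row = []
        for x in range(size):
            fx = x * (cols - 1) / (size - 1)  # fractional source column
            x0 = int(fx)
            x1 = min(x0 + 1, cols - 1)
            tx = fx - x0
            top = grid[y0][x0] * (1 - tx) + grid[y0][x1] * tx
            bottom = grid[y1][x0] * (1 - tx) + grid[y1][x1] * tx
            row.append(top * (1 - ty) + bottom * ty)
        out.append(row)
    return out


# Tiny placeholder grid; a real DEM would have many more cells.
dem = [[0.0, 10.0], [20.0, 30.0]]
heightmap = resample(dem)
print(len(heightmap), len(heightmap[0]))
```

The corner values of the source grid are preserved exactly, and everything in between is interpolated, so the terrain keeps its overall shape while matching the required dimensions.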

To integrate streets and railways into the landscape, we tried to use an existing modification for Cities: Skylines which can be found in the Steam Workshop: Cimtographer by emf. Given the coordinates of the bounding box for the terrain, it is supposed to read the geometrical information from OpenStreetMap. A working solution would be amazing, as one could then read information not only from OSM but also from OpenHistoricalMap, and so recreate historical places. Unfortunately, the algorithm does not work properly, though we were able to create and document some amazing “street art”.

Another potential way to recreate the structure of the cities might be to create an aerial image overlay and redraw the streets and houses manually. Of course, this would mean an enormous amount of manual work.

Further work can be done regarding the actual buildings. Cities: Skylines provides the opportunity to mod the original meshes and textures to bring in your very own structures. It might be possible to create historical buildings as well. However, one has to think about the proper resolution of this work. It might also be an interesting task to figure out how to create low resolution meshes out of historical images.

Team

- Susanna Ånäs

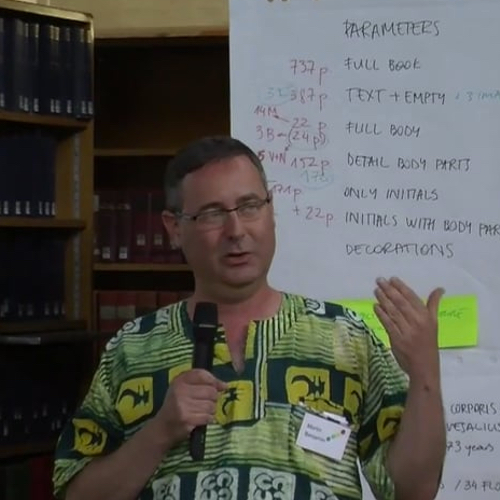

Visual Exploration of Vesalius' Fabrica

We are using cultural data from a rare copy of DE HUMANI CORPORIS FABRICA combined with a quantitative and qualitative analysis of visual content. All of this is done without reading a single page of text. That means that we are trying to create a content-independent visual analysis method, which would give the public an overview and quick insight.

Screenshots of the prototype

Process

Clickable Prototype

Some Gifs

Data

Team

- Radu Suciu

- Nicole Lachenmeier, www.yaay.ch

- Indre Grumbinaite, www.yaay.ch

- Danilo Wanner, www.yaay.ch

- Darjan Hil, www.yaay.ch

- Vlad Atanasiu

Sprichort

sprichort is an application which lets users travel in time. It is based on a map and historical photographs, such as Spelterini's photographs from his voyages to Egypt, across the Alps or to Russia. Each historical photograph is paired with an image of what the place looks like now, so people can see the change. The photographs are complemented with people's stories about these places and literary descriptions of them.

An important aspect is participation. Users should be able to upload their own historical photographs, and they should be able to provide current photos to match historical photographs.

The User Interface of the application:

Web

Data

Team

- Ricardo Joss

- Daisy Larios

- Jia Chyi, Wang

- Sabrina Montimurro

SFA-Metadata (Swiss Federal State Archives) at EEXCESS

The goal of our "hack" is to reuse existing search and visualization tools for dedicated datasets provided by the Swiss Federal State Archives.

EEXCESS (an EU-funded research project, www.eexcess.eu) aims to unlock the treasure of cultural, educational and scientific long-tail content for the benefit of all users. To this end, the project built software components that connect databases, support both automated (recommender engine) and active search queries, and offer a set of visualization tools for accessing the results.

The Swiss Federal State Archives host a huge variety of digitized objects. We aim to build a dedicated connection between that data and the EEXCESS infrastructure (using a Google Chrome extension) and thus find out whether different kinds of visualization can support intuitive access to the data (e.g. creating virtual landscapes from socio-economic data, or browsing historical photographs using timelines or maps).

Let's keep our fingers crossed …

Data

Team

- Louis Gantner

- Daniel Hess

- Marco Majoleth

- André Ourednik

- Jörg Schlötterer

#GlamHackClip2016

Short clip edited to document the GLAMHack 2016, featuring short interviews with hackathon participants that we recorded on site and additional material from the Open Cultural Data Sets made available for the hackathon.

Data

Music - Public Domain Music Recordings and Metadata / Swiss Foundation Public Domain

- Amilcare Ponchielli (1834-1886), La Gioconda, Dance of the hours (part 2), recorded in Manchester, 29 July 1941

- Joseph Haydn, Trumpet Concerto in E Flat, recorded on 19 June 1946

Aerial Photographs by Eduard Spelterini / Swiss National Library

- Eduard Spelterini, Basel between 1893 and 1923. See picture.

- Eduard Spelterini, Basel between 1893 and 1902. See picture.

Images 1945-1973 / Dodis

- 1945: Arrival of Jewish refugees

- 1945: Refugees at the border

Team

- Jan Baumann (infoclio.ch)

- Enrico Natale (infoclio.ch)