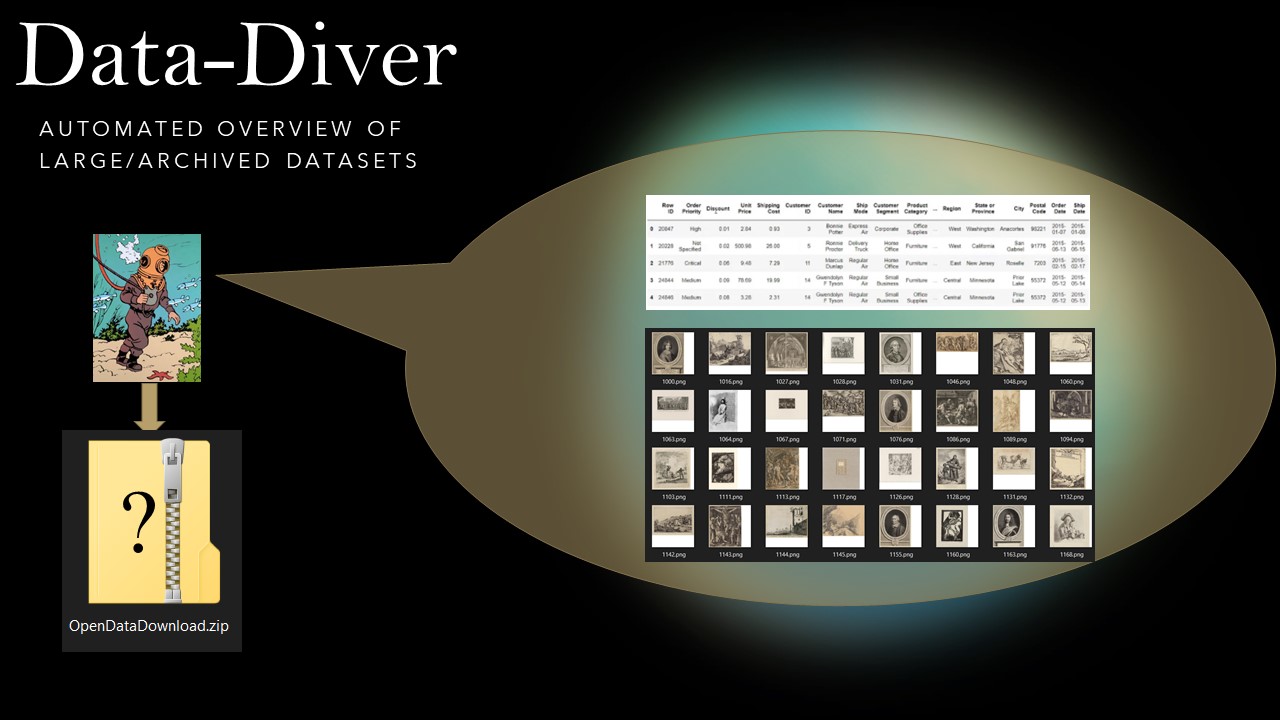

An insight into the GLAMhack2022 by Alessandro Plantera, and

Hackathons

GLAMhack 2022

Alain und Laura sind neue Medien

Two comedians take a poetic journey along selected cultural datasets. They uncover trivia, funny and bizarre facts, and give life to data in a most peculiar way. In French, German, English and some Italian.

Data

- http://www.openstreetmap.org

- http://make.opendata.ch/wiki/data:glam_ch#pcp_inventory

- http://make.opendata.ch/wiki/data:glam_ch#carl_durheim_s_police_photogra...

- http://make.opendata.ch/wiki/data:glam_ch#swiss_book_2001

Team

- Catherine Pugin

- Laura Chaignat

- Alain Guerry

- Dominique Genoud

- Florian Evéquoz

Links

Tools: Knime, Python, D3, sweat, coffee and salmon pasta

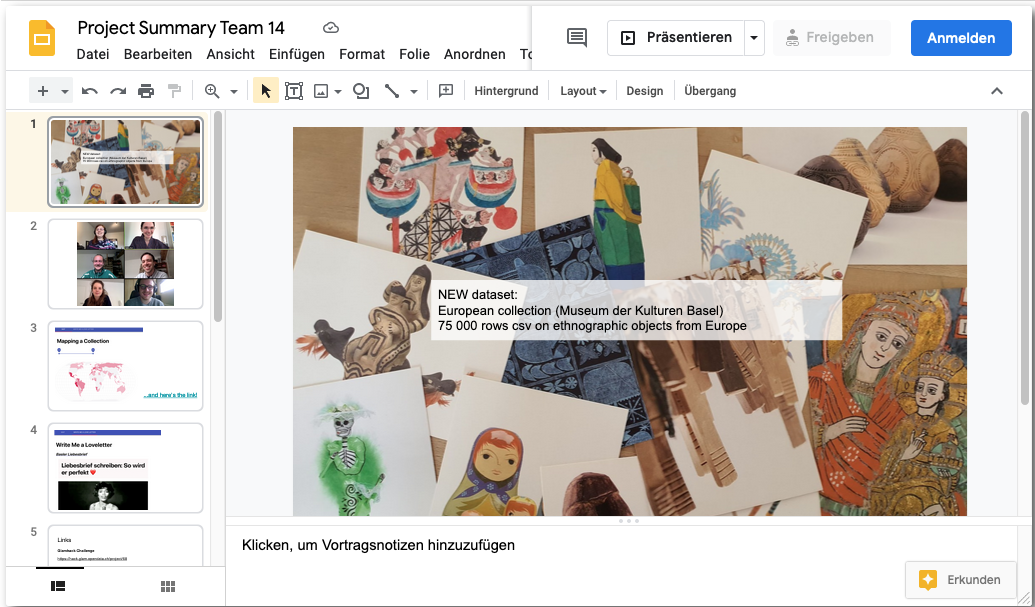

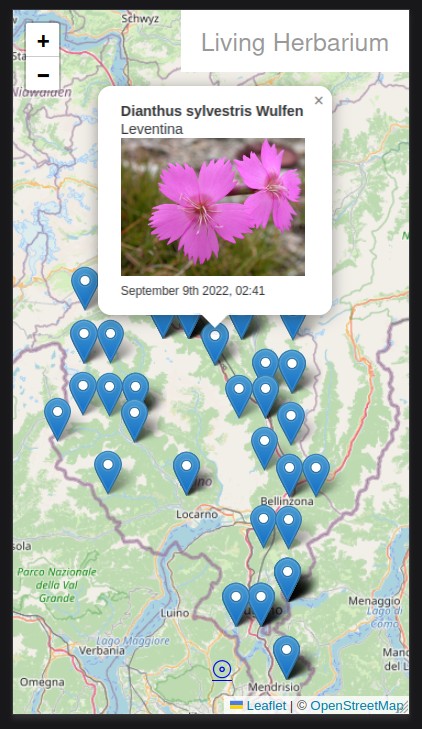

Artmap

Simple webpage created to display the art on the map and allow to click on each individual element and to follow the URL link:

Find art around you on a map!

Data

Team

- odi

- julochrobak

- and other team members

catexport

Export Wikimedia Commons categories to your local machine with a convenient web user interface.

Try it here: http://catexport.herokuapp.com

Data

- Wikimedia Commons API

Team

- odi

- and other team members

Related

At #glamhack a number of categories were made available offline by loleg using this shell script and magnus-toolserver.

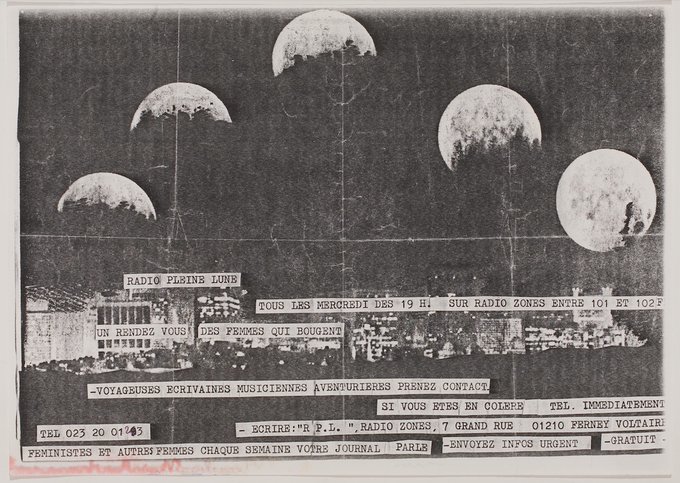

Cultural Music Radio

This is a cultural music radio which plays suitable music depending on your gps location or travel route. Our backend server looks for artists and musicians nearby the user's location and sends back an array of Spotify music tracks which will then be played on the iOS app.

Server Backend

We use a python server backend to process RESTful API requests. Clients can send their gps location and will receive a list of Spotify tracks which have a connection to this location (e.g. the artist / musician come from there).

Javascript Web Frontend

We provide a javascript web fronted so the services can be used from any browser. The gps location is determined via html5 location services.

iOS App

In our iOS App we get the user's location and send it to our python backend. We then receive a list of Spotify tracks which will be then played via Spotify iOS SDK.

There is also a map available which shows where the user is currently located and what is around him.

We offer a nicely designed user interface which allows users to quickly switch between music and map view to discover both environment and music! ![]()

Data and APIs

-

MusicBrainz for linking locations to artists and music tracks

-

Spotify for music streaming in the iOS app (account required)

Team

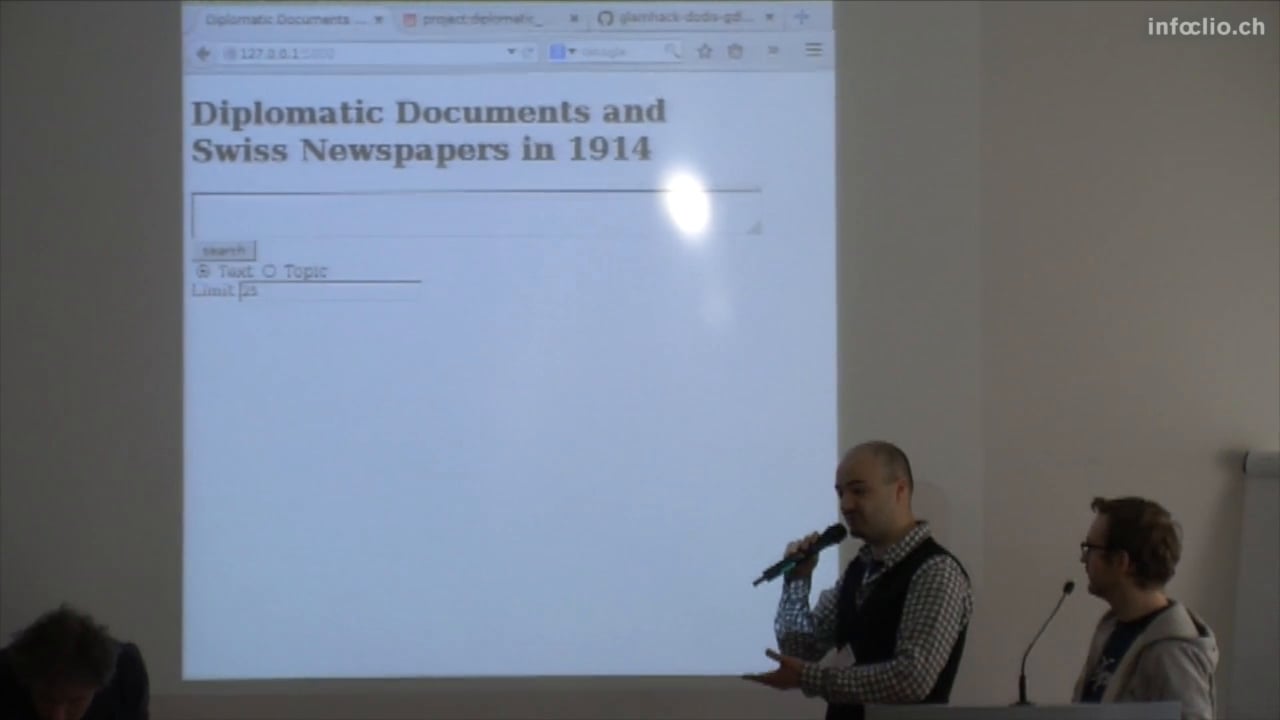

Diplomatic Documents and Swiss Newspapers in 1914

This project gathers two data sets: Diplomatic Documents of Switzerland and Le Temps Historical Archive for year 1914. Our aim is to find links between the two data sets to allow users to more easily search the corpora together. The project is composed by two parts:

- The Geographical Browser of the corpora. We extract all places from Dodis metadata and all places mentioned in each article of Le Temps, we then match documents and articles that refer to the same places and visualise them on a map for geographical browsing.

- The Text similarity search of the corpora. We train two models on the Le Temps corpus: Term Frequency Inverse Document Frequency and Latent Semantic Indexing with 25 topics. We then develop a web interface for text similarity search over the corpus and test it with Dodis summaries and full text documents.

Data and source code

-

Data.zip (CC BY 4.0) (DropBox)

Documentation

In this project, we want to connect newspaper articles from Journal de Genève (a Genevan daily newspaper) and the Gazette de Lausanne to a sample of the Diplomatic Documents in Switzerland database (Dodis). The goal is to conduct requests in the Dodis descriptive metadata to look for what appears in a given interval of time in the press by comparing occurrences from both data sets. Thus, we should be able to examine if and how the written press reflected what was happening at the diplomatic level. The time interval for this project is the summer of 1914.

In this context, at first we cleaned the data, for example by removing noise caused by short strings of characters and stopwords. The cleansing is a necessary step to reduce noise in the corpus. We then compared prepared tfidf vectors of words and LSI topics and represented each article in the corpus as such. Finally, we indexed the whole corpus of Le Temps to prepare it for similarity queries. THe last step was to build an interface to search the corpus by entering a text (e.g., a Dodis summary).

Difficulties were not only technical. For example, the data are massive: we started doing this work on fifteen days, then on three months. Moreover, some Dodis documents were classified (i.e. non public) at the time, therefore some of the decisions don't appear in the newspapers articles. We also used the TXM software, a platform for lexicometry and text statistical analysis, to explore both text corpora (the DODIS metadata and the newspapers) and to detect frequencies of significant words and their presence in both corpora.

Dodis Map

Team

Graphing the Stateless People in Carl Durheim's Photos

CH-BAR has contributed 222 photos by Carl Durheim, taken in 1852 and 1853, to Wikimedia Commons. These pictures show people who were in prison in Bern for being transients and vagabonds, what we would call travellers today. Many of them were Yenish. At the time the state was cracking down on people who led a non-settled lifestyle, and the pictures (together with the subjects' aliases, jobs and other information) were intended to help keep track of these “criminals”.

Since the photos' metadata includes details of the relationships between the travellers, I want to try graphing their family trees. I also wonder if we can find anything out by comparing the stated places of origin of different family members.

I plan to make a small interactive webapp where users can click through the social graph between the travellers, seeing their pictures and information as they go.

I would also like to find out more about these people from other sources … of course, since they were officially stateless, they are unlikely to have easily-discoverable certificates of birth, death and marriage.

-

Source code: downloads photographs from Wikimedia, parses metadata and creates a Neo4j graph database of people, relationships and places

Data

- Category:Durheim portraits contributed by CH-BAR on Wikimedia

Team

Links

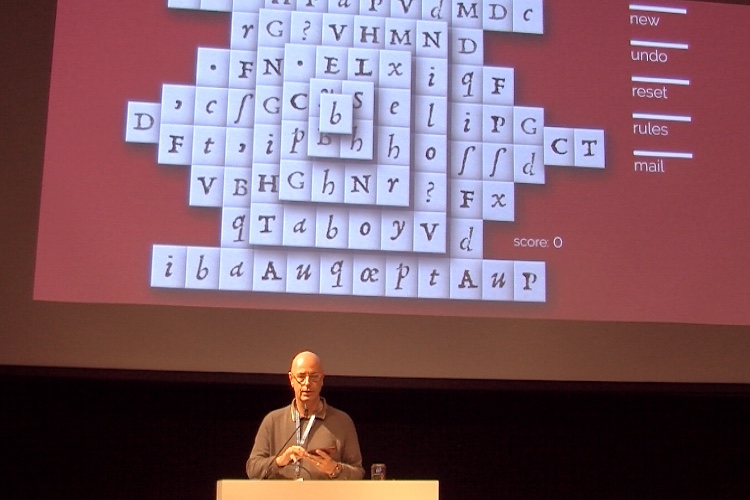

Historical Tarot Freecell

Historical playing cards are witnesses of the past, icons of the social and economic reality of their time. On display in museums or stored in archives, ancient playing cards are no longer what they once were meant to be: a deck of cards made for playful entertainment. This project aims at making historical playing cards playable again by means of the well-known solitaire card game "Freecell".

Tarot Freecell is a fully playable solitaire card game coded in HTML 5. It offers random setup, autoplay, reset and undo options. The game features a historical 78-card deck used for games and divination. The cards were printed in the 1880s by J. Müller & Cie., Schaffhausen, Switzerland.

Tarot Freecell is a fully playable solitaire card game coded in HTML 5. It offers random setup, autoplay, reset and undo options. The game features a historical 78-card deck used for games and divination. The cards were printed in the 1880s by J. Müller & Cie., Schaffhausen, Switzerland.

The cards need to be held upright and use Roman numeral indexing. The lack of modern features like point symmetry and Arabic numerals made the deck increasingly unpopular.

Due to the lack of corner indices - a core feature of modern playing cards - the vertical card offset needs to be significantly higher than in other computer adaptations.

Instructions

Cards are dealt out with their faces up into 8 columns until all 52 cards are dealt. The cards overlap but you can see what cards are lying underneath. On the upper left side there is space for 4 cards as a temporary holding place during the game (i.e. the «free cells»). On the upper right there is space for 4 stacks of ascending cards to begin with the aces of each suit (i.e. the «foundation row»).

Look for the aces of the 4 suits – swords, sticks, cups and coins. As soon as the aces are free (which means that there are no more cards lying on top of them) they will flip to the foundation row. Play the cards between the columns by creating lines of cards in descending order, alternating between swords/sticks and cups/coins. For example, you can place a swords nine onto a coins ten, or a cups jack onto a sticks queen.

Placing cards onto free cells (1 card per cell only) will give you access to other cards in the columns. Look for the lower numbers of each suit and move cards to gain access to them. You can move entire stacks, the number of cards moveable at a time is limited to the number of free cells (and empty stacks) plus one.

The game strategy comes from moving cards to the foundations as soon as possible. Try to increase the foundations evenly, so you have cards to use in the columns. If «Auto» is switched on, cards no other card can be placed on will automatically flip to the foundations.

You win the game when all 8 columns are sorted in descending order. All remaining cards will then flip to the foundations, from ace to king in each suit.

Updates

2015/02/27 v1.0: Basic game engine

2015/02/28 v1.1: Help option offering modern suit and value indices in the upper left corner

2015/03/21 v1.1: Retina resolution and responsive design

Data

- Wikipedia: Tarot 1JJ

- Wikimedia Commons: Tarot 1JJ card set

Author

- Prof. Thomas Weibel, Thomas Weibel Multi & Media

Historical Views of Zurich Data Upload

Preparation of approx. 200 historical photographs and pictures of the Baugeschichtliches Archiv Zurich for upload unto Wikimedia Commons. Metadata enhancing, adding landmarks and photographers as additional category metadata

Team

- Micha Rieser

- Reto Wick

- wild

- Marco Sieber

- Martin E. Walder

- and other team members

Lausanne Historic GeoGuesser

http://hackathon2015.cruncher.ch/

A basic GeoGuesser game using pictures of Lausanne from the 19th century. All images are available on http://musees.lausanne.ch/ and are are part of the Musée Historique de Lausanne Collection.

Data

Team

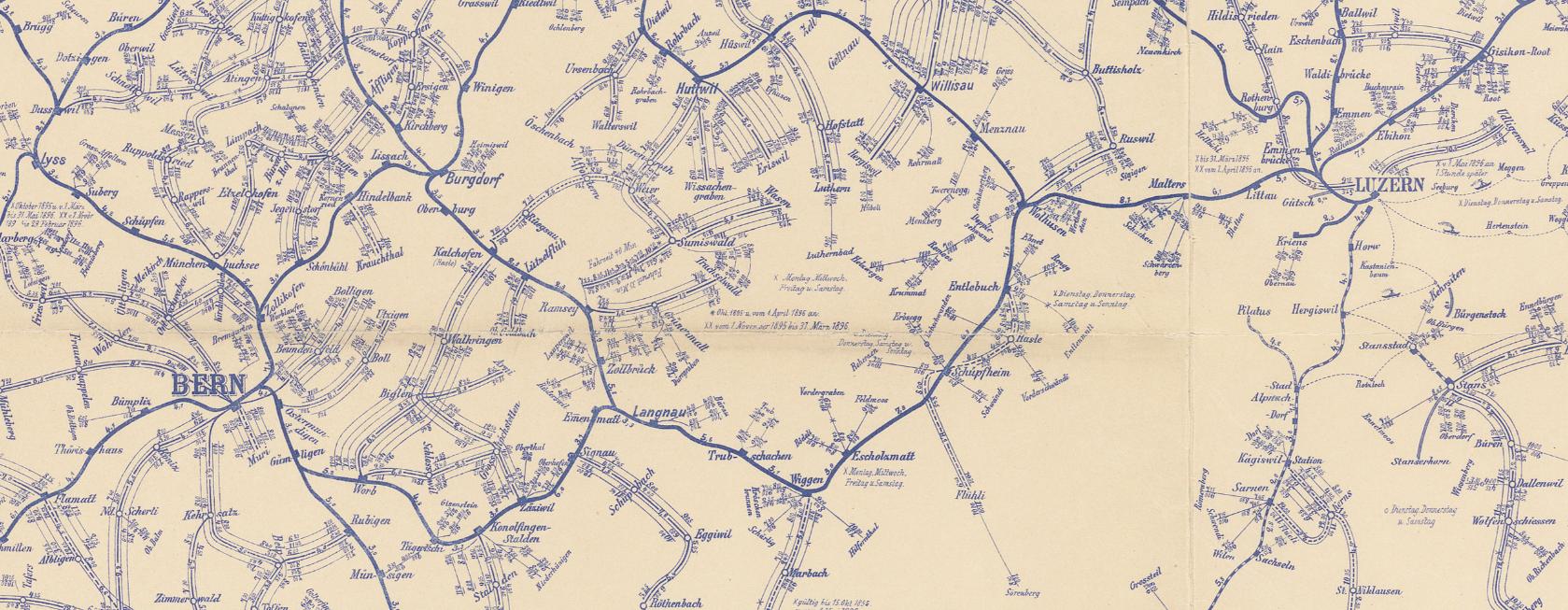

Oldmaps online

screenshot: map from Ryhiner collection in oldmapsonline

Integrate collections of historical Swiss maps into the open platform www.oldmapsonline.org. At least the two collections Rhyiner (UB Bern) and manuscript maps from Zentralbibliothek Zurich.

Pilot for georeferencing maps from a library (ZBZ).

Second goal: to load old maps into a georefencing system and create a competition for public.

For the hackathon maps and metadata will be integrated in the mentioned platform. At the moment the legal status of metadata from Swiss libraries is not yet clear and only a few maps are in public domain (collection Ryhiner at UB Bern).

Another goal is to create a register of historical maps from Swiss collections.

Data

-

www.zumbo.ch old maps from a private collection (Marcel Zumstein).

-

Sammlung Ryhiner (University Library Bern): published in public domain

-

Here is the webpage for the georeferencing competition: http://klokan.github.io/openglambern/

-

georeferencing tool (by klokan) with random map: http://zumbo.georeferencer.com/random

Team

-

Peter Pridal, Günter Hipler, Rudolf Mumenthaler

Links

-

Documentation: Google Doc of #glamhack

-

Blog or forum posts will follow…

-

Tools we used: http://project.oldmapsonline.org/contribute for the metadata scheme (spreadsheet);

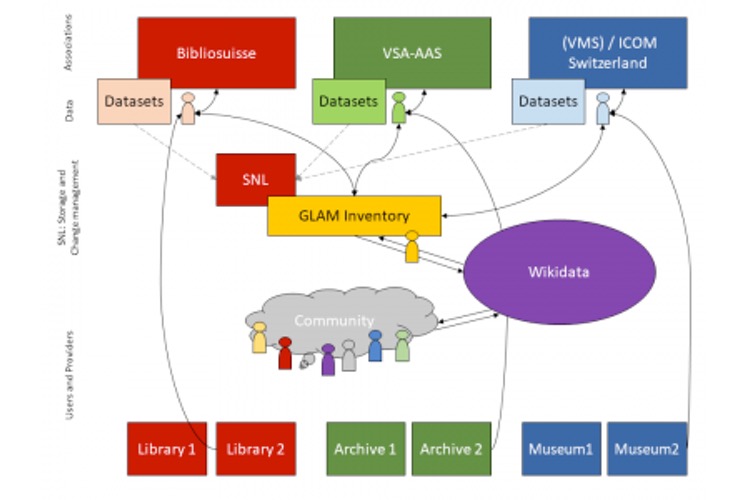

OpenGLAM Inventory

Gfuerst, CC by-sa 3.0, via Wikimedia Commons

Idea: Create a database containing all the heritage institutions in Switzerland. For each heritage institution, all collections are listed. For each collection the degree of digitization and the level of openness are indicated: metadata / content available in digital format? available online? available under an open license? etc. - The challenge is twofold: First, we need to find a technical solution that scales well over time and across national borders. Second, the processes to regularly collect data about the collections and their status need to be set up.

Step 1: Compilation of various existing GLAM databases (done)

National inventories of heritage institutions are created as part of the OpenGLAM Benchmark Survey; there is also a network of national contacts in a series of countries which have been involved in a common data collection exercise.

Step 2: GLAM inventory on Wikipedia (ongoing)

Port the GLAM inventory to Wikipedia, enrich it with links to existing Wikipedia articles: See

German Wikipedia|Project "Schweizer Gedächtnisinstitutionen in der Wikipedia".

Track the heritage institutions' presence in Wikipedia.

Encourage the Wikipedia community to write articles about institutions that haven't been covered it in Wikipedia.

Once all existing articles have been repertoried, the inventory can be transposed to Wikidata.

Further steps

-

Provide a basic inventory as a Linked Open Data Service

-

Create an inventory of collections and their accessibility status

Data

-

Swiss GLAM Inventory (Datahub)

Team

-

various people from the Wikipedia communtiy

-

and maybe you?

Picture This

Story

-

The Picture This “smart” frame shows police photographs of homeless people by Carl Durheim (1810-1890)

-

By looking at a picture, you trigger a face detection algorithm to analyse both, you and the homeless person

-

The algorithm detects gender, age and mood of the person on the portrait (not always right)

-

You, as a spectator, become part of the system / algorithm judging the homeless person

-

The person on the picture is at the mercy of the spectator, once again

How it works

-

Picture frame has a camera doing face detection for presence detection

-

Pictures have been pre-processed using a cloud service

-

Detection is still rather slow (should run faster on the Raspi 2)

-

Here's a little video https://www.flickr.com/photos/tamberg/16053255113/

Questions (not) answered

-

Who were those people? Why were they homeless? What was their crime?

-

How would we treat them? How will they / we be treated tomorrow? (by algorithms)

Data

Team

-

@ram00n

-

@tamberg

-

and you

Ideas/Iterations

-

Download the pictures to the Raspi and display one of them (warmup)

-

Slideshow and turning the images 90° to adapt to the screensize

-

Play around with potentiometer and Arduino to bring an analog input onto the Raspi (which only has digital I/O)

-

Connect everything and adapt the slideshow speed with the potentiometer

-

Display the name (extracted from the filename) below the picture

next steps, more ideas:

-

Use the Raspi Cam to detect a visitor in front of the frame and stop the slideshow

-

Use the Raspi Cam to take a picture of the face of the visitor

-

Detect faces in the camera picture

-

Detect faces in the images [DONE, manually, using online service]

-

…merge visitor and picture faces

Material

-

7inch TFT https://www.adafruit.com/products/947

-

Picture frame http://www.thingiverse.com/thing:702589

Software

-

http://www.raspberrypi.org/downloads/ - Raspbian

-

http://www.pygame.org - A game framework, allows easy display of images

-

https://bitbucket.org/tamberg/makeopendata/src/tip/2015/PictureThis - Scraper and Display

Links

-

http://www.raspberrypi.org/facial-recognition-opencv-on-the-camera-board/ - OpenCV on Raspi-Cam

-

https://speakerdeck.com/player/083e55006e4a013063711231381528f7 - Slide 106 for face replacement

Not used this time, but might be useful

-

https://thinkrpi.wordpress.com/2013/05/22/opencv-and-camera-board-csi/ - old Raspian versions did not have the latest OpenCV installed. Nice howto for that

-

https://realpython.com/blog/python/face-recognition-with-python/ - OpenCV, nice but not used

-

http://docs.opencv.org - not used directly, only via SimpleCV

-

http://makezine.com/projects/pi-face-treasure-box/ - maybe a nice weekend project

-

http://www.aliexpress.com/item/FreeShipping-Banana-Pro-Pi-7-inch-LVDS-LCD-Module-7-Touch-Screen-F-Raspberry-Pi-Car/32246029570.html - 7inch TFT mit LVDS Flex Connector

Portrait Id

(Original working title: Portrait Domain)

This is a concept for an gamified social media platform / art installation aiming to explore alternate identity, reflect on the usurping of privacy through facial recognition technology, and make use of historic digitized photographs in the Public Domain to recreate personas from the past. Since the #glamhack event where this project started, we have developed an offline installation which uses Augmented Reality to explore the portraits. See videos on Twitter or Instagram.

View the concept document for a full description.

Data

The exhibit gathers data on user interactions with historical portraits, which is combined with analytics from the web application on the Logentries platform:

Team

Launched by loleg at the hackdays, this project has already had over a dozen collaborators and advisors who have kindly shared time and expertise in support. Thank you all!

Please also see the closely related projects picturethis and graphing_the_stateless.

Links

Public Domain Game

A card game to discover the public domain. QR codes link physical cards with data and digitized works published online. This project was started at the 2015 Open Cultural Data Hackathon.

Sources

Team

-

Mario Purkathofer

-

Joël Vogt

-

Bruno Schlatter

Schweizer Kleinmeister: An Unexpected Journey

This project shows a large image collection in an interactive 3D-visualisation. About 2300 prints and drawings from “Schweizer Kleinmeister” from the Gugelmann Collection of the Swiss National Library form a cloud in the virtual space.

The images are grouped according to specific parameters that are automatically calculated by image analysis and based on metadata. The goal is to provide a fast and intuitive access to the entire collection, all at once. And this not accomplished by means of a simple list or slideshow, where items can only linearly be sorted along one axis like time or alphabet. Instead, many more dimensions are taken into account. These dimensions (22 for techniques, 300 for image features or even 2300 for descriptive text analysis) are then projected onto 3D space, while preserving topological neighborhoods in the original space.

The project renounces to come up with a rigid ontology and forcing the items to fit in premade categories. It rather sees clusters emerge from attributes contained in the images and texts themselves. Groupings can be derived but are not dictated.

The user can navigate through the cloud of images. Clicking on one of the images brings up more information about the selected artwork. For the mockup, three different non-linear groupings were prepared. The goal however is to make the clustering and selection dependent on questions posed by any individual user. A kind of personal exhibition is then curated, different for every spectator.

Open Data used

Gugelmann Collection, Swiss National Library

http://opendata.admin.ch/en/dataset/sammlung-gugelmann-schweizer-kleinmeister

http://commons.wikimedia.org/wiki/Category:CH-NB-Collection_Gugelmann

Team

-

Sonja Gasser ( @sonja_gasser)

Links

Spock Monroe Art Brut

(Click image for full-size download on DeviantArt)

This is a photo mosaic based on street art in SoHo, New York City - Spock\Monroe (CC BY 2.0) as photographed by Ludovic Bertron . The mosaic is created out of miniscule thumbnails (32 pixels wide/tall) of 9486 images from the Collection de l'Art Brut in Lausanne provided on http://musees.lausanne.ch/ using the Metapixel software running on Linux at the 1st Swiss Open Cultural Data Hackathon.

This is a humble tribute to Leonard Nimoy, who died at the time of our hackathon.

Data

Team

Swiss Games Showcase

A website made to show off the work of the growing Swiss computer game scene. The basis of the site is a crowdsourced list of Swiss games. This list is parsed, and additional information on each game is automatically gathered. Finally, a static showcase page is generated.

Data

-

Source List on Google Docs of Released and Playable Swiss Video Games

Team

The Endless Story

Data

Team

Links

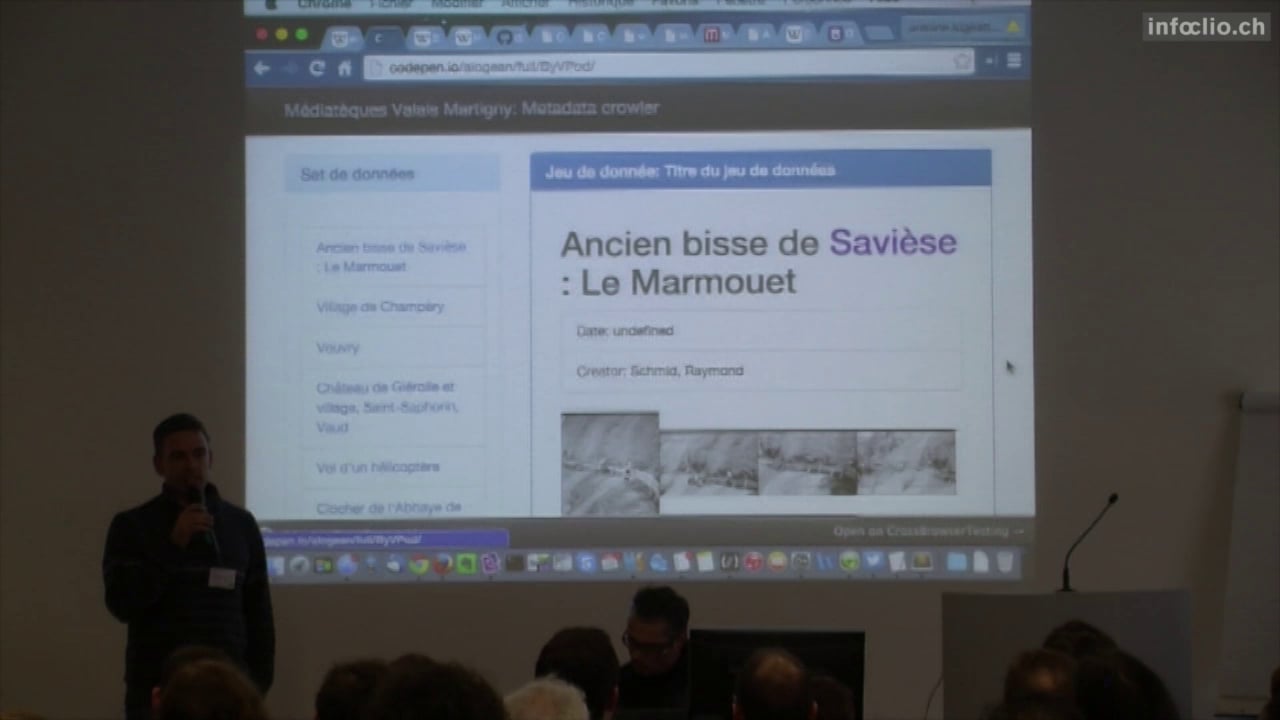

Thematizer

Problem:

There are a lot of cultural data (meta-data, texts, videos, photos) available to the community, in Open Data format or not, that are not valued and sleep in data silos.

These data could be used, non-exhaustively, in the areas of tourism (services or products creations highlighting the authenticity of the visited area) or in museums (creation of thematic visits based on visitors profiles)

Proposition:

We propose to work on an application able to request different local specialized cultural datasets and make links, through the result, with the huge, global, universal, Wikipedia and Google Map to enrich the cultural information returned to the visitor.

Prototype 1 (Friday):

One HTML page with a search text box and a button. It requests Wikipedia with the value, collect the JSON page’s content, parse the Table of Content in order to keep only level 1 headers, display the result in both vertical list and word cloud.

Prototype 2 (Saturday):

One HTML page accessing the dataset from the Mediathèque of Valais (http://xml.memovs.ch/oai/oai2.php?verb=ListIdentifiers&metadataPrefix=oai_dc&set=k), getting all the “qdc” XML pages and displaying them in a vertical list. When you click on one of those topics, on the right of the page you will get some information about the topic, one image (if existing) and a cloud of descriptions. Clicking on one of the descriptions will then request Wikipedia with that value and display the content.

If we have enough time we will also get a location tag from the Mediathèque XML file and then display a location on Google Map.

Demo

-

http://codepen.io/alogean/full/ByVPod/ (last version)

Resources (examples, similar applications, etc.):

This idea is based on a similar approach that has been developed during Museomix 2014 : http://www.museomix.org/prototypes/museochoix/ . We are trying to figure out how to extend this idea to other contexts than the museums and to other datasets than those proposed by that particular museum.

Data

Example of made requests:

Other potentially interesting datasets for future work:

Team

Links

-

https://twitter.com/mdammers?lang=fr . Link to the Wikidata expert who gave us some useful links to the Wikipedia API.

-

https://pad.okfn.org/p/Lausanne_Museums_GLAMhack . Pad with information about the Museris 3.0 initiative in Lausanne and access to their manual database of meta-data. (Not sure the links will work very long after the hackday…)

ViiSoo

Exploring a water visualisation and sonification remix with Open Data,

to make it accessible for people who don't care about data.

Why water? Water is a open accessible element for life. It

flows like data and everyone should have access to it.

We demand Open Access to data and water.

Join us, we have stickers.

Open Data Used

Tec / Libraries

-

HTML5, Client Side Javascript, CSS and Cowbells

Team

Created by the members of Kollektiv Zoll

ToDoes

-

Flavours (snow, lake, river)

-

Image presentation

-

Realtime Input and Processing of Data from an URL

See it live

Solothurner Kościuszko-Inventar

Webapp Eduard Spelterini

PreOCR

Animation in the spirit of dada poetry

The computer produces animations. Dada! All your artworks are belong to us. We build the parameters, you watch the animations. Words, images, collide together into ever changing, non-random, pseudo-random, deliberately unpredictable tensile moments of social media amusement. Yay!

For this first prototype we used Walter Serner’s Text “Letzte Lockerung – Manifest Dada” as a source. This text is consequently reconfigured, rewritten and thereby reinterpreted by the means of machine learning using “char-rnm”.

Images in the public domain snatched out of the collection “Wandervögel” from the Schweizerische Sozialarchiv.

Data

-

Images are taken from the collection Wandervögel from the Schweizerische Sozialarchiv

-

Text Walter Serner Letzte Lockerung - Manifest Dada

-

Tool char-rnn

Team

Performing Arts Ontology

The goal of the project is to develop an ontology for the performing arts domain that allows to describe the holdings of the Swiss Archives for the Performing Arts (formerly Swiss Theatre Collection and Swiss Dance Collection) and other performing arts related holdings, such as the holdings represented on the Performing Arts Portal of the Specialized Information Services for the Performing Arts (Goethe University Frankfurt).

See also: Project "Linked Open Theatre Data"

Data

-

Data from the www.performing-arts.eu Portal

Project Outputs

2017:

-

Draft Version of the Swiss Performing Arts Ontology (Pre-Release, 24 May 2017)

2016:

-

Initial Data Modelling Experiments: Swiss Performing Arts Vocabulary (deprecated)

Resources

Team

-

Christian Schneeberger

-

Birk Weiberg

-

René Vielgut (2016)

-

Julia Beck

-

Adrian Gschwend

Kamusi Project: Every Word in Every Language

We are developing many resources built around linguistic data, for languages around the world. At the Cultural Data hackathon, we are hoping for help with:

-

An application for translating museum exhibit information and signs in other public spaces (zoos, parks, etc) to multiple languages, so visitors can understand the exhibit no matter where they come from. We currently have a prototype for a similar application for restaurants.

-

Cultural datasets: we are looking for multilingual lexicons that we can incorporate into our db, to enhance the general vocabulary that we have for many languages.

-

UI design improvements. We have software, but it's not necessarily pretty. We need the eyes of designers to improve the user experience.

Data

-

List and link your actual and ideal data sources.

Team

Historical Dictionary of Switzerland Out of the Box

The Historical Dictionary of Switzerland (HDS) is an academic reference work which documents the most important topics and objects of Swiss history from prehistory up to the present.

The HDS digital edition comprises about 36.000 articles organized in 4 main headword groups:

- Biographies,

- Families,

- Geographical entities and

- Thematical contributions.

Beyond the encyclopaedic description of entities/concepts, each article contains references to primary and secondary sources which supported authors when writing articles.

Data

We have the following data:

* metadata information about HDS articles Historical Dictionary of Switzerland comprising:

-

bibliographic references of HDS articles

-

article titles

* Le Temps digital archive for the year 1914

Goals

Our projects revolve around linking the HDS to external data and aim at:

-

Entity linking towards HDS

The objective is to link named entity mentions discovered in historical Swiss newspapers to their correspondant HDS articles.

-

Exploring reference citation of HDS articles

The objective is to reconcile HDS bibliographic data contained in articles with SwissBib.

Named Entity Recognition

We used web-services to annotate text with named entities:

- Dandelion

- Alchemy

- OpenCalais

Named entity mentions (persons and places) are matched against entity labels of HDS entries and directly linked when only one HDS entry exists.

Further developments would includes:

- handling name variants, e.g. 'W.A. Mozart' or 'Mozart' should match 'Wolfgang Amadeus Mozart' .

- real disambiguation by comparing the newspaper article context with the HDS article context (a first simple similarity could be tf-idf based)

- working with a more refined NER output which comprises information about name components (first, middle,last names)

Bibliographic enrichment

We work on the list of references in all articles of the HDS, with three goals:

-

Finding all the sources which are cited in the HDS (several sources are cited multiple times) ;

-

Link all the sources with the SwissBib catalog, if possible ;

-

Interactively explore the citation network of the HDS.

The dataset comes from the HDS metadata. It contains lists of references in every HDS article:

Result of source disambiguation and look-up into SwissBib:

Bibliographic coupling network of the HDS articles (giant component). In Bibliographic coupling two articles are connected if they cite the same source at least once.

Biographies (white), Places (green), Families (blue) and Topics (red):

Ci-citation network of the HDS sources (giant component of degree > 15). In co-citation networks, two sources are connected if they are cited by one or more articles together.

Publications (white), Works of the subject of an article (green), Archival sources (cyan) and Critical editions (grey):

Team

- odi

- julochrobak

- and other team members

Visualize Relationships in Authority Datasets

Raw MACS data:

Transforming data.

Visualizing MACS data with Neo4j:

Visualization showing 300 respectively 1500 relationships:

Visualization showing 3000 relationships. For an exploration of the relations you find a high-res picture here graph_3000_relations.png (10.3MB)

Please show me the shortest path between “Rechtslehre” und “Ernährung”:

Some figures

-

original MACS dataset: 36.3MB

-

'wrangled' MACS dataset: 171MB

-

344134 nodes in the dataset

-

some of our laptops have difficulties to handle visualization of more than 4000 nodes :(

Datasets

-

MACS - Multilingual Access to Subjects http://www.dnb.de/DE/Wir/Kooperation/MACS/macs_node.html

-

GND - Gemeinsame Normdatei http://www.dnb.de/DE/Standardisierung/GND/gnd_node.html

Process

-

get data

-

transform data (e.g. with “Metafactor”)

-

load data in graph database (e.g. “Neo4j”)

*its not as easy as it sounds

Team

-

Günter Hipler

-

Silvia Witzig

-

Sebastian Schüpbach

-

Sonja Gasser

-

Jacqueline Martinelli

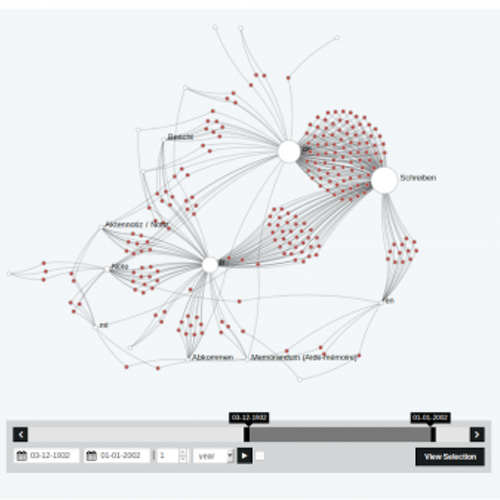

Dodis Goes Hackathon

Wir arbeiten mit den Daten zu den Dokumenten von 1848-1975 aus der Datenbank Dodis und nutzen hierfür Nodegoat.

Animation (mit click öffnen):

Data

Team

-

Christof Arnosti

-

Amandine Cabrio

-

Lena Heizmann

-

Christiane Sibille

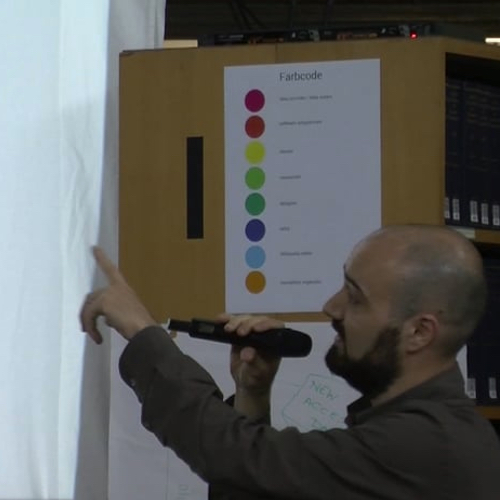

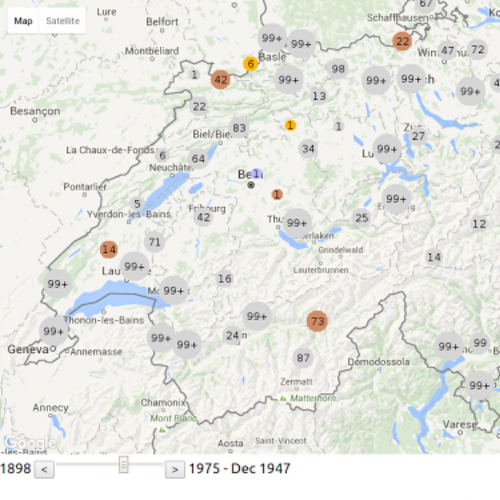

VSJF-Refugees Migration 1898-1975 in Switzerland

We developed an interactive visualization of the migration flow of (mostly jewish) refugees migrating to or through Switzerland between 1898-1975. We used the API of google maps to show the movement of about 20'000 refugees situated in 535 locations in Switzerland.

One of the major steps in the development of the visualization was to clean the data, as the migration route is given in an unstructured way. Further, we had to overcame technical challenges such as moving thousands of marks on a map all at once.

The journey of a refugee starts with the place of birth and continues with the place from where Switzerland was entered (if known). Then a series of stays within Switzerland is given. On average a refugee visited 1 to 2 camps or homes. Finally, the refugee leaves Switzerland from a designated place to a destination abroad. Due to missing information some of the dates had to be estimated, especially for the date of leave where only 60% have a date entry.

The movements of all refugees can be traced over time in the period of 1898-1975 (based on the entry date). The residences in Switzerland are of different types and range from poor conditions as in prison camps to good conditions as in recovery camps. We introduced a color code to display different groups of camps and homes: imprisoned (red), interned (orange), labour (brown), medical (green), minors (blue), general (grey), unknown (white).

As additional information, to give the dots on the map a face, we researched famous people who stayed in Switzerland in the same time period. Further, historical information were included to connect the movements of refugees to historical events.

Data

Code

Team

-

Bourdic Maïwenn

-

Noyer Frédéric

-

Tutav Yasemin

-

Züger Marlies

Manesse Gammon

The Codex Manesse, or «Große Heidelberger Liederhandschrift», is an outstanding source of Middle High German Minnesang, a book of songs and poetry the main body of which was written and illustrated between 1250 and 1300 in Zürich, Switzerland. The Codex, produced for the Manesse family, is one of the most beautifully illustrated German manuscripts in history.

The Codex Manesse is an anthology of the works of about 135 Minnesingers of the mid 12th to early 14th century. For each poet, a portrait is shown, followed by the text of their works. The entries are ordered by the social status of the poets, starting with the Holy Roman Emperor Heinrich VI, the kings Konrad IV and Wenzeslaus II, down through dukes, counts and knights, to the commoners.

Page 262v, entitled «Herr Gœli», is named after a member of the Golin family originating from Badenweiler, Germany. «Herr Gœli» may have been identified either as Konrad Golin (or his nephew Diethelm) who were high ranked clergymen in 13th century Basel. The illustration, which is followed by four songs, shows «Herrn Gœli» and a friend playing a game of Backgammon (at that time referred to as «Pasch», «Puff», «Tricktrack», or «Wurfzabel»). The game is in full swing, and the players argue about a specific move.

Join in and start playing a game of backgammon against «Herrn Gœli». But watch out: «Herr Gœli» speaks Middle High German, and despite his respectable age he is quite lucky with the dice!

Instructions

You control the white stones. The object of the game is to move all your pieces from the top-left corner of the board clockwise to the bottom-left corner and then off the board, while your opponent does the same in the opposite direction. Click on the dice to roll, click on a stone to select it, and again on a game space to move it. Each die result tells you how far you can move one piece, so if you roll a five and a three, you can move one piece five spaces, and another three spaces. Or, you can move the same piece three, then five spaces (or vice versa). Rolling doubles allows you to make four moves instead of two.

Note that you can't move to spaces occupied by two or more of your opponent's pieces, and a single piece without at least another ally is vulnerable to being captured. Therefore it's important to try to keep two or more of your pieces on a space at any time. The strategy comes from attempting to block or capture your opponent's pieces while advancing your own quickly enough to clear the board first.

And don't worry if you don't understand what «Herr Gœli» ist telling you in Middle High German: Point your mouse to his message to get a translation into modern English.

Updates

2016/07/01 v1.0: Index page, basic game engine

2016/07/02 v1.1: Translation into Middle High German, responsive design

2016/07/04 v1.11: Minor bug fixes

Data

-

Universitätsbibliothek Heidelberg: Der Codex Manesse und die Entdeckung der Liebe

-

Universitätsbibliothek Heidelberg: Große Heidelberger Liederhandschrift – Cod. Pal. germ. 848 (Codex Manesse)

-

Wikimedia Commons: Codex Manesse

-

Historisches Lexikon der Schweiz: Manessische Handschrift

-

Historisches Lexikon der Schweiz: Baden, von

-

Wörterbuchnetz: Mittelhochdeutsches Handwörterbuch von Matthias Lexer

Author

-

Prof. Thomas Weibel, Thomas Weibel Multi & Media

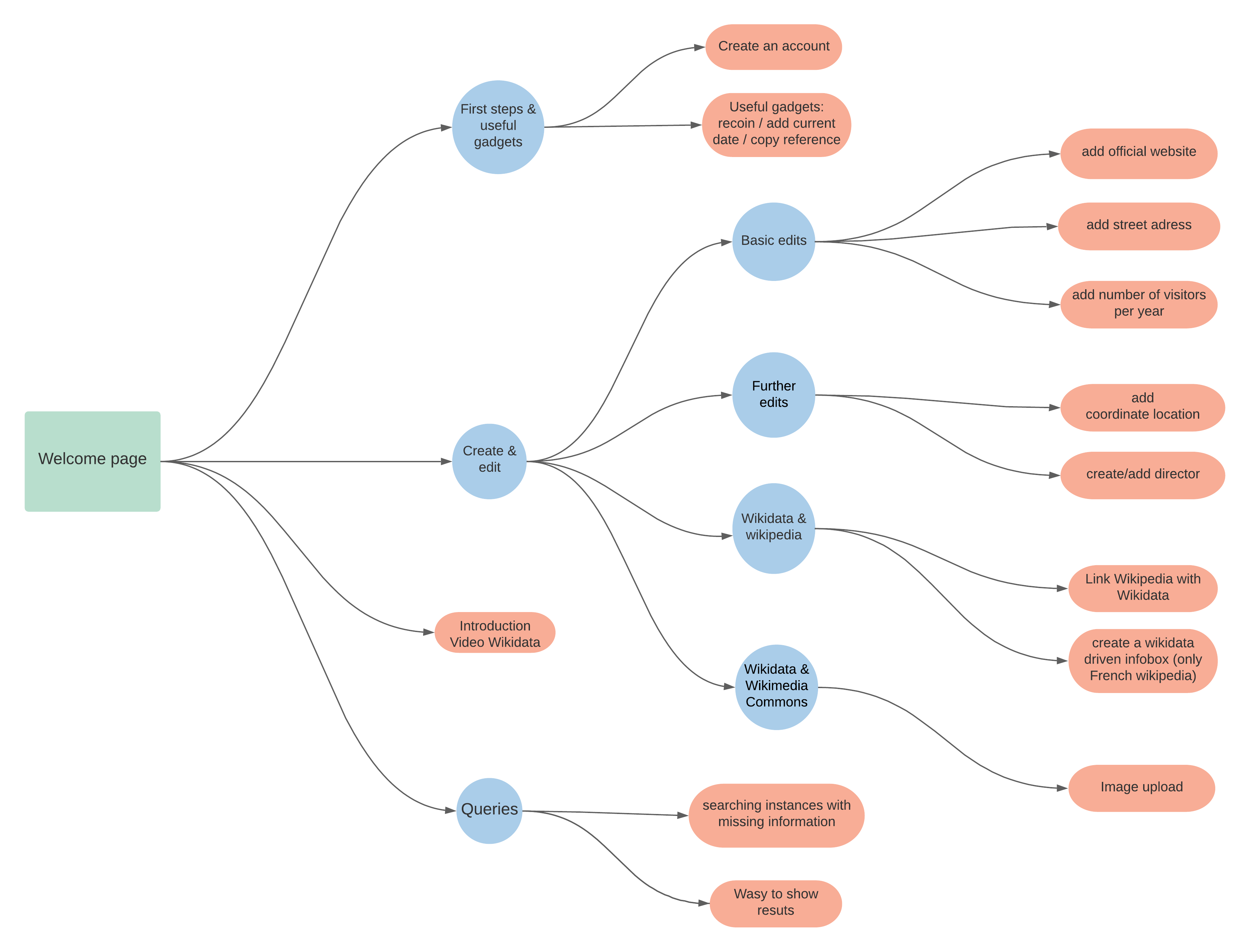

Historical maps

The group discussed the usage of historical maps and geodata using the Wikimedia environment. Out of the discussion we decided to work on 4 learning items and one small hack.

The learning items are:

-

The workflow for historical maps - the Wikimaps workflow

-

Wikidata 101

-

Public artworks database in Sweden - using Wikidata for storing the data

-

Mapping maps metadata to Wikidata: Transitioning from the map template to storing map metadata in Wikidata. Sum of all maps?

The small hack is:

-

Creating a 3D gaming environment in Cities Skylines based on data from a historical map.

Data

This hack is based on an experimentation to map a demolished and rebuilt part of Helsinki. In DHH16, a Digital Humanities hackathon in Helsinki this May, the goal was to create a historical street view. The source aerial image was georeferenced with Wikimaps Warper, traced with OpenHistoricalMap, historical maps from the Finna aggregator were georeferenced with the help of the Geosetter program and finally uploaded to Mapillary for the final street view experiment.

The Small Hack - Results

Our goal has been to recreate the historical area of Helsinki in a modern game engine provided by Cities: Skylines (Developer: Colossal Order). This game provides a built-in map editor which is able to read heightmaps (DEM) to rearrange the terrain according to it. Though there are some limits to it: The heightmap has to have an exact size of 1081x1081px in order to be able to be translated to the game's terrain.

To integrate streets and railways into the landscape, we tried to use an already existing modification for Cities: Skylines which can be found in the Steam Workshop: Cimtographer by emf. Given the coordinates of the bounding box for the terrain, it is supposed to read out the geometrical information of OpenStreetMap. A working solution would be amazing, as one would not only be able to read out information of OSM, but also from OpenHistoricalMap, thus being able to recreate historical places. Unfortunately, the algorithm is not working that properly - though we were able to create and document some amazing “street art”.

Another potential way of how to recreate the structure of the cities might be to create an aerial image overlay and redraw the streets and houses manually. Of course, this would mean an enormous manual work.

Further work can be done regarding the actual buildings. Cities: Skylines provides the opportunity to mod the original meshes and textures to bring in your very own structures. It might be possible to create historical buildings as well. However, one has to think about the proper resolution of this work. It might also be an interesting task to figure out how to create low resolution meshes out of historical images.

Team

-

Susanna Ånäs

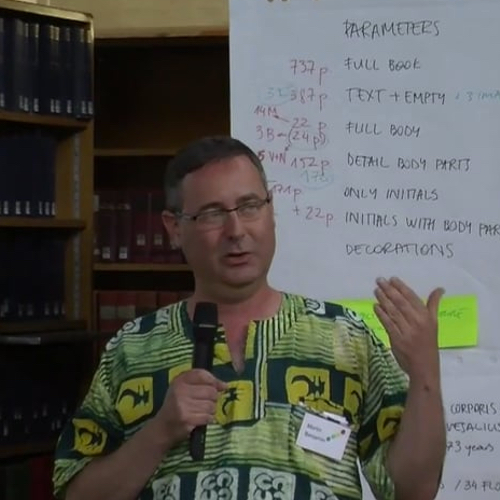

Visual Exploration of Vesalius' Fabrica

Screenshots of the prototype

Description

We are using cultural data of a rare copy of DE HUMANI CORPORIS FABRICA combined with a quantitive and qualitative analysis of visual content. This all is done without reading a single text page. That means that we are trying to create a content independent visual analysis method, which would enable a public to have an overview and quick insight.

Process

Clickable Prototype

Some Gifs

Data

Team

-

Radu Suciu

-

Nicole Lachenmeier, www.yaay.ch

-

Indre Grumbinaite, www.yaay.ch

-

Danilo Wanner, www.yaay.ch

-

Darjan Hil, www.yaay.ch

-

Vlad Atanasiu

Sprichort

sprichort is an application which lets users travel in time. The basis are a map and historical photographs like Spelterini's photographs from his voyages to Egypt, across the Alps or to Russia. To each historical photograph comes an image of what it looks like now. So people can see change. The photographs are complemented with stories from people about this places and literary descriptions of this places.

An important aspect is participation. Users should be able to upload their own hostorical photographs and they should be able to provide actual photos to historical photographs.

The User Interface of the application:

Web

Data

Team

-

Ricardo Joss

-

Daisy Larios

-

Jia Chyi, Wang

-

Sabrina Montimurro

SFA-Metadata (swiss federal state archives) at EEXCESS

The goal of our „hack“ is to reuse existing search and visualization tools for dedicated datasets provided by the swiss federal state archives.

The project EEXCESS (EU funded research project, www.eexcess.eu) has the vision to unfold the treasure of cultural, educational and scientific long-tail content for the benefit of all users. In this context the project realized different software components to connect databases, providing means for both automated (recommender engine) and active search queries and a bunch of visualization tools to access the results.

The federal swiss state archives hosts a huge variety of digitized objects. We aim at realizing a dedicated connection of that data with the EEXCESS infrastructure (using a google chrome extension) and thus find out, whether different ways of visualizations can support intuitive access to the data (e.g. Creation of virtual landscapes from socia-economic data, browse through historical photographs by using timelines or maps etc.).

Let's keep fingers crossed …![]()

Data

Team

-

Louis Gantner

-

Daniel Hess

-

Marco Majoleth

-

André Ourednik

-

Jörg Schlötterer

#GlamHackClip2016

Short clip edited to document the GLAMHack 2016, featuring short interviews with hackathon participants that we recorded on site and additional material from the Open Cultural Data Sets made available for the hackathon.

Data

Music - Public Domain Music Recordings and Metadata / Swiss Foundation Public Domain

- Amilcare Ponchielli (1834-1886), La Gioconda, Dance of the hours (part 2), recorded in Manchester, 29. Juli 1941

- Joseph Haydn, Trumpet Concerto in E Flat, recorded on the 19. Juni 1946

Aerial Photographs by Eduard Spelterini / Swiss National Library

- Eduard Spelterini, Basel between 1893 and 1923. See picture.

- Eduard Spelterini, Basel between 1893 and 1902. See picture.

Bilder 1945-1973 / Dodis

- 1945: Ankunft jüdischer Flüchtlinge

- 1945: Flüchtlinge an der Grenze

Team

-

Jan Baumann (infoclio.ch)

-

Enrico Natale (infoclio.ch)

Aufstieg und Niedergang des modernen Zeitregimes

Der Beitrag fragt nach dem Umgang mit Zeit, nach der Diskursivität von Zeit in der Geschichtsschreibung des 19. und der ersten Hälfte des 20. Jahrhunderts. Zeit als Wahrnehmungsmuster beeinflusst Modi und Ausformungen der Konstruktion von Erinnerung – von Geschichtsschreibung wie von anderen Erinnerungsmodi –, während letztere ihrerseits Zeitkonzeptionen und Deutungen von Zeit strukturieren und festschreiben. Es sollen Wege der Tiefenanalyse des Funktionierens von Erinnerungsdiskursen mit speziellem Blick auf die Geschichtsschreibung aufgezeigt werden, wobei ein dreifacher Fokus verfolgt wird: auf diskursive Dimensionen, welche Zeitwahrnehmung und Zeitdeutung zum Ausdruck bringen, auf deren Konstruktionslogiken sowie auf die Narrativität von Zeit, Geschichte und Gedächtnis.

Wireless telegraphy and synchronisation of time. A transnational perspective

This paper, based on unpublished documents of the International Telecommunication Union’s archive and radio amateurs' magazines, discusses the multiform relationships between wireless telegraphy and time at the beginning of the 20th century. Specifically, it aims to analyze how time synchronization and wireless telegraphy depend on and refer to each other, explicitly and implicitly.

First, wireless became an essential infrastructure for time synchronization, when in 1912 the International Time Conference inaugurated the network of signaling stations with the Eiffel tower. Second, radio time signals brought forward the development of wireless and became one of the first widely accepted forms of radio broadcasting. Finally, this relation between time and wireless later evolved in new unforeseen applications that led to the development of other technologies, such as aviation and seismology. In conclusion, wireless generated new ideas, affected different technological fields, and changed the perception of distance and time.

Collaborative Face Recognition and Picture Annotation for Archives

Le projet

Les Archives photographiques de la Société des Nations (SDN) — ancêtre de l'actuelle Organisation des Nations Unies (ONU) — consistent en une collection de plusieurs milliers de photographies des assemblées, délégations, commissions, secrétariats ainsi qu'une série de portraits de diplomates. Si ces photographies, numérisées en 2001, ont été rendues accessible sur le web par l'Université de l'Indiana, celles-ci sont dépourvues de métadonnées et sont difficilement exploitables pour la recherche.

Les Archives photographiques de la Société des Nations (SDN) — ancêtre de l'actuelle Organisation des Nations Unies (ONU) — consistent en une collection de plusieurs milliers de photographies des assemblées, délégations, commissions, secrétariats ainsi qu'une série de portraits de diplomates. Si ces photographies, numérisées en 2001, ont été rendues accessible sur le web par l'Université de l'Indiana, celles-ci sont dépourvues de métadonnées et sont difficilement exploitables pour la recherche.

Notre projet est de valoriser cette collection en créant une infrastructure qui soit capable de détecter les visages des individus présents sur les photographies, de les classer par similarité et d'offrir une interface qui permette aux historiens de valider leur identification et de leur ajouter des métadonnées.

Le projet s'est déroulé sur deux sessions de travail, en mai (Geneva Open Libraries) et en septembre 2017 (3rd Swiss Open Cultural Data Hackathon), séparées ci-dessous.

Session 2 (sept. 2017)

L'équipe

| Université de Lausanne | United Nations Archives | EPITECH Lyon | Université de Genève |

|---|---|---|---|

| Martin Grandjean | Blandine Blukacz-Louisfert | Gregoire Lodi | Samuel Freitas |

| Colin Wells | Louis Schneider | ||

| Adrien Bayles | |||

| Sifdine Haddou |

Compte-Rendu

Dans le cadre de la troisième édition du Swiss Open Cultural Data Hackathon, l’équipe qui s’était constituée lors du pre-event de Genève s’est retrouvée à l’Université de Lausanne les 15 et 16 septembre 2017 dans le but de réactualiser le projet et poursuivre son développement.

Vendredi 15 septembre 2017

Les discussions de la matinée se sont concentrées sur les stratégies de conception d’un système permettant de relier les images aux métadonnées, et de la pertinence des informations retenues et visibles directement depuis la plateforme. La question des droits reposant sur les photographies de la Société des Nations n’étant pas clairement résolue, il a été décidé de concevoir une plateforme pouvant servir plus largement à d’autres banques d’images de nature similaire.

Samedi 16 septembre 2017

Découverte : Wikimedia Commons dispose de son propre outil d'annotation : ImageAnnotator. Voir exemple ci-contre.

Découverte : Wikimedia Commons dispose de son propre outil d'annotation : ImageAnnotator. Voir exemple ci-contre.

Code

Organisation

https://github.com/PictureAnnotation

Repositories

https://github.com/PictureAnnotation/Annotation

https://github.com/PictureAnnotation/Annotation-API

Data

Images en ligne sur Wikimedia Commons avec identification basique pour tests :

A (1939) https://commons.wikimedia.org/wiki/File:League_of_Nations_Commission_075.tif

B (1924-1927) https://commons.wikimedia.org/wiki/File:League_of_Nations_Commission_067.tif

Session 1 (mai 2017)

L'équipe

| Université de Lausanne | United Nations Archives | EPITECH Lyon | Archives d'Etat de Genève |

|---|---|---|---|

| Martin Grandjean martin.grandjean@unil.ch | Blandine Blukacz-Louisfert bblukacz-louisfert@unog.ch | Adam Krim adam.krim@epitech.eu | Anouk Dunant Gonzenbach anouk.dunant-gonzenbach@etat.ge.ch |

| Colin Wells cwells@unog.ch | Louis Schneider louis.schneider@epitech.eu | ||

| Maria Jose Lloret mjlloret@unog.ch | Adrien Bayles adrien.bayles@epitech.eu | ||

| Paul Varé paul.vare@epitech.eu |

Ce projet fait partie du Geneva Open Libraries Hackathon.

Compte-Rendu

Vendredi 12 mai 2017

Lancement du hackathon Geneva Open Libraries à la Bibliothèque de l'ONU (présentation du week-end, pitch d'idées de projets, …)

Premières idées de projets:

- Site avec tags collaboratifs pour permettre l'identification des personnes sur des photos d'archives.

- Identification des personnages sur des photos d'archives de manière automatisée.

→ Identifier automatiquement toutes les photos où se situe la même personne et permettre l'édition manuelle de tags qui s'appliqueront sur toutes les photos du personnage (plus besoin d'identifier photo par photo les personnages photographiés).

Samedi 13 mai 2017

Idéalisation du projet: que peut-on faire de plus pour que le projet ne soit pas qu'un simple plugin d'identification ? Que peut-on apporter de novateur dans la recherche collaborative ? Que peut-on faire de plus que Wikipédia ?

Travailler sur la photo, la manière dont les données sont montrées à l'utilisateur, etc…

Problématique de notre projet: permettre une collaboration sur l'identification de photos d'archives avec une partie automatisée et une partie communautaire et manuelle.

Analyser les photos → Identifier les personnages → Afficher la photo sur un site avec tous les personnages marqués ainsi que tous les liens et notes en rapports.

Utilisateur → Création de tags sur la photographie (objets, scènes, liens historiques, etc..) → Approbation de la communauté de l'exactitude des tags proposés.

Travail en cours sur le P.O.C.:

- Front du site: partie graphique du site, survol des éléments…

- Prototype de reconnaissance faciale: quelques défauts à corriger, exportation des visages…

- Poster du projet

Dimanche 14 mai 2017

Le projet ayant été sélectionné pour représenter le hackathon Geneva Open Libraries lors de la cérémonie de clôture de l'Open Geneva Hackathons (un projet pour chacun des hackathons se tenant à Genève ce week-end), il est présenté sur la scène du Campus Biotech.

Data

Poster Genève - Mai 2017

Version PDF grande taille disponible ici. Version PNG pour le web ci-dessous.

Poster Lausanne - Sepembre 2017

Version PNG

Code

Jung - Rilke Correspondance Network

Joint project bringing together three separate projects: Rilke correspondance, Jung correspondance and ETH Library.

Objectives:

-

agree on a common metadata structure for correspondence datasets

-

clean and enrich the existing datasets

-

build a database that can can be used not just by these two projects but others as well, and that works well with visualisation software in order to see correspondance networks

-

experiment with existing visualization tools

Data

ACTUAL INPUT DATA

-

For Jung correspondance: https://opendata.swiss/dataset/c-g-jung-correspondence (three files)

-

For Rilke correspondance: https://opendata.swiss/en/dataset/handschriften-rainer-maria-rilke (two files, images and meta data)

Comment: The Rilke data is cleaner than the Jung data. Some cleaning needed to make them match:

1) separate sender and receiver; clean up and cluster (OpenRefine)

2) clean up dates and put in a format that IT developpers need (Perl)

3) clean up placenames and match to geolocators (Dariah-DE)

4) match senders and receivers to Wikidata where possible (Openrefine, problem with volume)

METADATA STRUCTURE

The follwing fields were included in the common basic data structure:

sysID; callNo; titel; sender; senderID; recipient; recipientID; place; placeLat; placeLong; datefrom, dateto; language

DATA CLEANSING AND ENRICHMENT

* Description of steps, and issues, in Process (please correct and refine).

Issues with the Jung correspondence is data structure. Sender and recipient in one column.

Also dates need both cleaning for consistency (e.g. removal of “ca.”) and transformation to meet developper specs. (Basil using Perl scripts)

For geocoding the placenames: OpenRefine was used for the normalization of the placenames and DARIAH GeoBrowser for the actual geocoding (there were some issues with handling large files). Tests with OpenRefine in combination with Open Street View were done as well.

The C.G. Jung dataset contains sending locations information for 16,619 out of 32,127 letters; 10,271 places were georeferenced. In the Rilke dataset all the sending location were georeferenced.

For matching senders and recipients to Wikidata Q-codes, OpenRefine was used. Issues encountered with large files and with recovering Q codes after successful matching, as well as need of scholarly expertise to ID people without clear identification. Specialist knowledge needed. Wikidata Q codes that Openrefine linked to seem to have disappeared? Instructions on how to add the Q codes are here https://github.com/OpenRefine/OpenRefine/wiki/reconciliation.

Doing this all at once poses some project management challenges, since several people may be working on same files to clean different data. Need to integrate all files.

DATA after cleaning:

https://github.com/basimar/hackathon17_jungrilke

DATABASE

Issues with the target database:

Fields defined, SQL databases and visuablisation program being evaluated.

How - and whether - to integrate with WIkidata still not clear.

Issues: letters are too detailed to be imported as Wikidata items, although it looks like the senders and recipients have the notability and networks to make it worthwhile. Trying to keep options open.

As IT guys are building the database to be used with the visualization tool, data is being cleaned and Q codes are being extracted.

They took the cleaned CVS files, converted to SQL, then JSON.

Additional issues encountered:

- Visualization: three tools are being tested: 1) Paladio (Stanford) concerns about limits on large files? 2) Viseyes and 3) Gephi.

- Ensuring that the files from different projects respect same structure in final, cleaned-up versions.

Visualization (examples)

Heatmap of Rainer Maria Rilke’s correspondence (visualized with Google Fusion Tables)

Correspondence from and to C. G. Jung visualized as a network. The two large nodes are Carl Gustav Jung (below) and his secretary’s office (above). Visualized with the tool Gephi

Team

-

Flor Méchain (Wikimedia CH): working on cleaning and matching with Wikidata Q codes using OpenRefine.

-

Lena Heizman (Dodis / histHub): Mentoring with OpenRefine.

-

Hugo Martin

-

Samantha Weiss

-

Michael Gasser (Archives, ETH Library): provider of the dataset C. G. Jung correspondence

-

Irina Schubert

-

Sylvie Béguelin

-

Basil Marti

-

Jérome Zbinden

-

Deborah Kyburz

-

Paul Varé

-

Laurel Zuckerman

-

Christiane Sibille (Dodis / histHub)

-

Adrien Zemma

-

Dominik Sievi wdparis2017

Schauspielhaus Zürich performances in Wikidata

The goal of the project is to try to ingest all performances of the Schauspielhaus Theater in Zurich held between 1938 and 1968 in Wikidata. In a further step, data from the www.performing-arts.eu Platform, Swiss Theatre Collection and other data providers could be ingested as well.

Data

Tools

-

OpenRefine to clean and reconcile data with wikidata

-

Wikidata Quick Statements to add new items to wikidata

-

Wikidata Quick Statements 2 Beta to add new items to wikidata in batch mode

References

Methodology

-

load data in OpenRefine

-

Column after column (starting with the easier ones) :

-

reconcile against wikidata

-

manually match entries that matched multiple entries in wikidata

-

find out what items are missing in wikidata

-

load them in wikidata using quick statements (quick statements 2 allow you to retrieve the Q numbers of the newly created items)

-

reconcile again in OpenRefine

-

Results

-

Code to transform csv into quick statements : https://github.com/j4lib/performing-arts/blob/master/create_quick_statements.php

Screenshots

Raw Data

Reconcile in OpenRefine

Choose corresponding type

Manually match multiple matches

Import in Wikidata with quick statements

-

Len : english label

-

P31 : instance of

-

Q5 : human

-

P106 : occupation

-

Q1323191 : costume designer

Step 3 (you can get the Q number from there)

Team

-

Renate Albrecher

-

Julia Beck

-

Flor Méchain

-

Beat Estermann

-

Birk Weiberg

-

Lionel Walter

Swiss Video Game Directory

This projects aims at providing a directory of Swiss Video Games and metadata about them.

The directory is a platform to display and promote Swiss Games for publishers, journalists, politicians or potential buyers, as well as a database aimed at the game developers community and scientific researchers.

Our work is the continuation of a project initiated at the 1st Open Data Hackathon in 2015 in Bern by David Stark.

Output

Workflow

-

An open spreadsheet contains the data with around 300 entries describing the games.

-

Every once in a while, data are exported into the Directory website (not publicly available yet).

-

At any moment, game devs or volunteer editors can edit the spreadsheet and add games or correct informations.

Data

The list was created on Aug. 11 2014 by David Javet, game designer and PhD student at UNIL. He then opened it to the Swiss game dev community which collaboratively fed it. At the start of this hackathon, the list was composed of 241 games, starting from 2004. It was turned into an open data set on the opendata.swiss portal by Oleg Lavrovsky.

Source

Team

-

Karine Delvert

-

Selim Krichane

-

Isaac Pante

-

David Stark (team leader)

More

Big Data Analytics (bibliographical data)

We try to analyse bibliographical data using big data technology (flink, elasticsearch, metafacture).

Here a first sketch of what we're aiming at:

Datasets

We use bibliographical metadata:

Swissbib bibliographical data https://www.swissbib.ch/

-

Catalog of all the Swiss University Libraries, the Swiss National Library, etc.

-

960 Libraries / 23 repositories (Bibliotheksverbunde)

-

ca. 30 Mio records

-

MARC21 XML Format

-

→ raw data stored in Mongo DB

-

→ transformed and clustered data stored in CBS (central library system)

-

Institutional Repository der Universität Basel (Dokumentenserver, Open Access Publications)

-

ca. 50'000 records

-

JSON File

crossref https://www.crossref.org/

-

Digital Object Identifier (DOI) Registration Agency

-

ca. 90 Mio records (we only use 30 Mio)

-

JSON scraped from API

Use Cases

Swissbib

Librarian:

- For prioritizing which of our holdings should be digitized most urgently, I want to know which of our holdings are nowhere else to be found.

- We would like to have a list of all the DVDs in swissbib.

- What is special about the holdings of some library/institution? Profile?

Data analyst:

- I want to get to know better my data. And be faster.

→ e.g. I want to know which records don‘t have any entry for ‚year of publication‘. I want to analyze, if these records should be sent through the merging process of CBS. Therefore I also want to know, if these records contain other ‚relevant‘ fields, defined by CBS (e.g. ISBN, etc.). To analyze the results, a visualization tool might be useful.

edoc

Goal: Enrichment. I want to add missing identifiers (e.g. DOIs, ORCID, funder IDs) to the edoc dataset.

→ Match the two datasets by author and title

→ Quality of the matches? (score)

Tools

elasticsearch https://www.elastic.co/de/

JAVA based search engine, results exported in JSON

Flink https://flink.apache.org/

open-source stream processing framework

Metafacture https://culturegraph.github.io/,

https://github.com/dataramblers/hackathon17/wiki#metafacture

Tool suite for metadata-processing and transformation

Zeppelin https://zeppelin.apache.org/

Visualisation of the results

How to get there

Usecase 1: Swissbib

Usecase 2: edoc

Links

Data Ramblers Project Wiki https://github.com/dataramblers/hackathon17/wiki

Team

-

Data Ramblers https://github.com/dataramblers

-

Dominique Blaser

-

Jean-Baptiste Genicot

-

Günter Hipler

-

Jacqueline Martinelli

-

Rémy Meja

-

Andrea Notroff

-

Sebastian Schüpbach

-

T

-

Silvia Witzig

Medical History Collection

Finding connections and pathways between book and object collections of the University Institute for History of Medecine and Public Heatlh (Institute of Humanities in Medicine since 2018) of the CHUV.

The project started off with data sets concerning two collections: book collection and object collection, both held by the University Institute for History of Medicine and Public Health in Lausanne. They were metadata of the book collection, and metadata plus photographs of object collection. These collections are inventoried in two different databases, the first one accessible online for patrons and the other not.

The idea was therefore to find a way to offer a glimpse into the objet collection to a broad audience as well as to highlight the areas of convergence between the two collections and thus to enhance the patrimony held by our institution.

Juxtaposing the library classification and the list of categories used to describe the object collection, we have established a table of concordance. The table allowed us to find corresponding sets of items and to develop a prototype of a tool that allows presenting them conjointly: https://medicalhistorycollection.github.io/glam2017/.

Finally, we’ve seized the opportunity and uploaded photographs of about 100 objects on Wikimedia: https://commons.wikimedia.org/wiki/Category:Institut_universitaire_d%27histoire_de_la_m%C3%A9decine_et_de_la_sant%C3%A9_publique.

Data

https://github.com/MedicalHistoryCollection/glam2017/tree/master/data

Team

-

Magdalena Czartoryjska Meier

-

Rae Knowler

-

Arturo Sanchez

-

Roxane Fuschetto

-

Radu Suciu

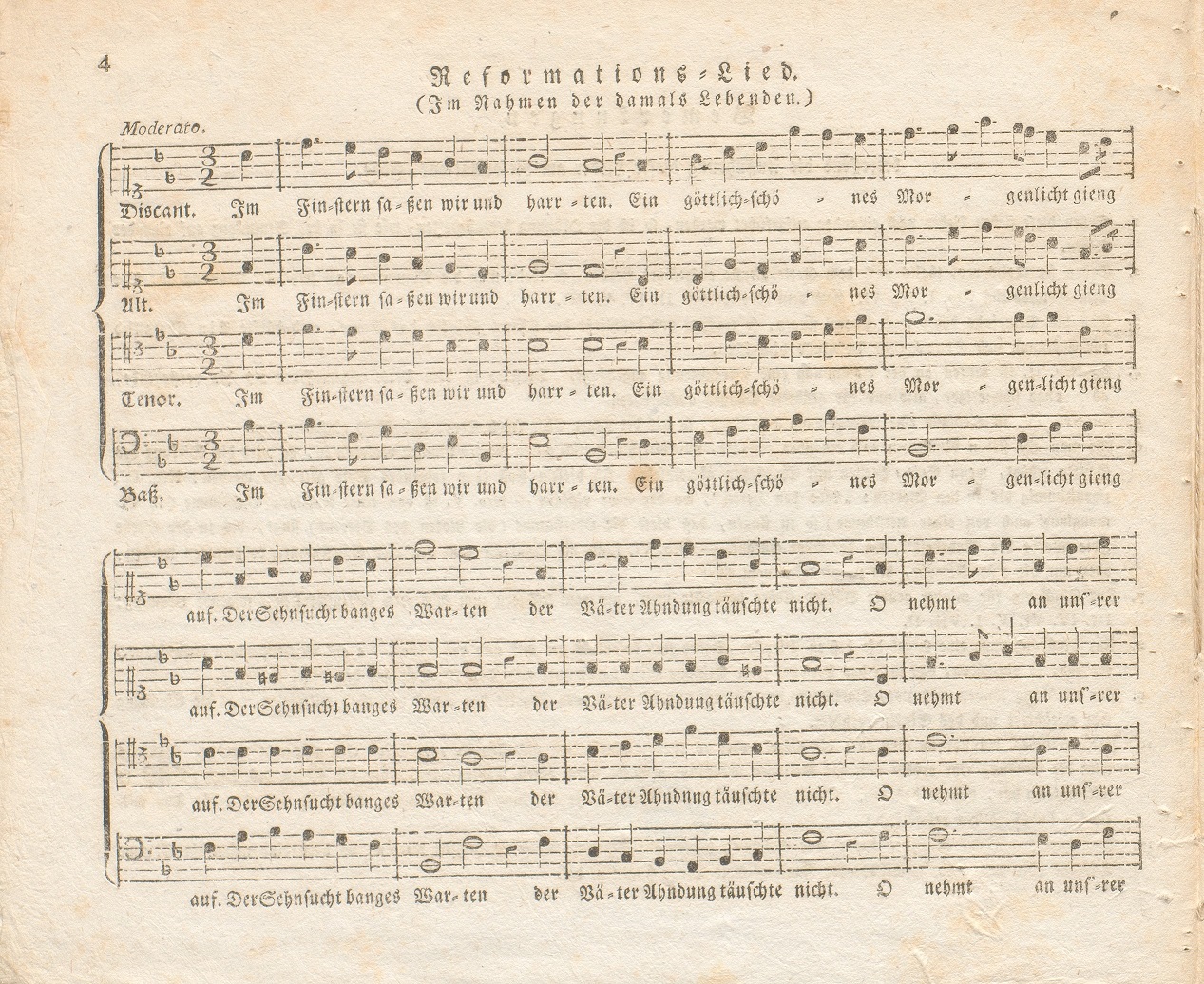

Old-catholic Church Switzerland Historical Collection

Christkatholische Landeskirche der Schweiz: historische Dokumente

Description

Der sog. “Kulturkampf” (1870-1886) (Auseinandersetzung zwischen dem modernen liberalen Rechtsstaat und der römisch-katholischen Kirche, die die verfassungsmässig garantierte Glaubens- und Gewissensfreiheit so nicht akzeptieren wollte) wurde in der Schweiz besonders heftig ausgefochten.

Ausgewählte Dokumente in den Archiven der christkatholischen Landeskirche bilden diese interessante Phase zwischen 1870 und 1886/1900 exemplarisch ab. Als lokale Fallstudie (eine Kirchgemeinde wechselt von der römisch-katholischen zur christkatholischen Konfession) werden in der Kollektion die Protokolle der Kirchgemeinde Aarau (1868-1890) gemeinfrei publiziert (CC-BY Lizenz). Dazu werden die digitalisierten Zeitschriften seit 1873 aus der Westschweiz publiziert. Die entsprechenden Dokumente wurden von den Archivträgern (Eigner) zur gemeinfreien Nutzung offiziell genehmigt und freigegeben. Allfällige Urheberrechte sind abgelaufen (70 Jahre) mit Ausnahme von wenigen kirchlichen Zeitschriften, die aber sowieso Öffentlichkeitscharakter haben.

Zielpublikum sind Historiker und Theologen sowie andere Interessierte aus Bildungsinstitutionen. Diese OpenData Kollektion soll andere christkatholische Gemeinden ermutigen weitere Quellen aus der Zeit des Kulturkampfes zu digitalisieren und zugänglich zu machen.

Overview

Bestände deutsche Schweiz :

• Kirchgemeinde Aarau

-

Protokolle Kirchgemeinderat 1868-1890

-

Monographie (1900) : Xaver Fischer : Abriss der Geschichte der katholischen (christkatholischen) Kirchgemeinde Aarau 1806-1895

Fonds Suisse Romande:

• Journaux 1873-2016

-

Le Vieux-Catholique 1873

-

Le Catholique-Suisse 1873-1875

-

Le Catholique National 1876-1878

-

Le Libéral 1878-1879

-

La Fraternité 1883-1884

-

Le Catholique National 1891-1908

-

Le Sillon de Genève 1909-1910

-

Le Sillon 1911-1970

-

Présence 1971-2016

• Canton de Neuchâtel

-

Le Buis 1932-2016

• Paroisse Catholique-Chrétienne de Genève: St.Germain (not yet published)

-

Répertoire des archives (1874-1960)

-

Conseil Supérieur - Arrêtés - 16 mai 1874 au 3 septembre 1875

-

Conseil Supérieur Président - Correspondence - 2 janv 1875 - 9 sept 1876

The data will be hosted on christkatholisch.ch; the publication date will be communicated. Prior to this the entry (national register) on opendata.swiss must be available and approved.

Data

* Présence (2007-2016): http://www.catholique-chretien.ch/publica/archives.php

Team

Swiss Social Archives - Wikidata entity match

Match linked persons of the media database of the Swiss Social Archives with Wikidata.

Data

-

Metadata of the media database of the Swiss Social Archives

Team

Hacking Gutenberg: A Moveable Type Game

The internet and the world wide web are often referred to as being disruptive. In fact, every new technology has a disruptive potential. 550 years ago the invention of the modern printing technology by Johannes Gutenberg in Germany (and, two decades later, by William Caxton in England) was massively disruptive. Books, carefully bound manuscripts written and copied by scribes during weeks, if not months, could suddenly be mass-produced at an incredible speed. As such the invention of moveable types, along with other basic book printing technologies, had a huge impact on science and society.

And yet, 15th century typographers were not only businessmen, they were artists as well. Early printing fonts reflect their artistic past rather than their industrial potential. The font design of 15th century types is quite obviously based on their handwritten predecessors. A new book, although produced by means of a new technology, was meant to be what books had been for centuries: precious documents, often decorated with magnificent illustrations. (Incunables – books printed before 1500 – often show a blank square in the upper left corner of a page so that illustrators could manually add artful initials after the printing process.)

Memory, also known as Match or Pairs, is a simple concentration game. Gutenberg Memory is an HTML 5 adaptation of the common tile-matching game. It can be played online and works on any device with a web browser. By concentrating on the tiles in order to remember their position the player is forced to focus on 15th (or early 16th) century typography and thus will discover the ageless elegance of the ancient letters.

Gutenberg Memory, Screenshot

Johannes Gutenberg: Biblia latina, part 2, fol. 36

Gutenberg Memory comes with 40 cards (hence 20 pairs) of syllables or letter combinations. The letters are taken from high resolution scans (>800 dpi) of late medieval book pages digitized by the university library of Basel. Given the original game with its 52 cards (26 pairs), Gutenberg Memory has been slightly simplified. Nevertheless it is rather hard to play as the player's visual orientation is constrained by the cards' typographic resemblance.

In addition, the background canvas shows a geographical map of Europe visualizing the place of printing. Basic bibliographic informations are given in the caption below, including a link to the original scan.

Instructions

Click on the cards in order to turn them face up. If two of them are identical, they will remain open, otherwise they will turn face down again. A counter indicates the number of moves you have made so far. You win the game as soon as you have successfully found all the pairs. Clicking (or tapping) on the congratulations banner, the close button or the restart button in the upper right corner will reshuffle the game and proceed to a different font, the origin of which will be displayed underneath.

Updates

2017/09/15 v1.0: Prototype, basic game engine (5 fonts)

2017/09/16 v2.0: Background visualization (place of printing)

2017/09/19 v2.1: Minor fixes

Data

-

Wikimedia Commons, 42-zeilige Gutenbergbibel, Teil 2, Blatt 36, Mainz, 1454-1455

-

Wikimedia Commons, 42-zeilige Gutenbergbibel, Teil 2, Blatt 35, Detail

-

Wikimedia Commons, Adam Petri, Martin Luther, Das Alte Testament deutsch, Basel, 1523-1525

-

Wikimedia Commons, Aldus Manutius, Horatius Flaccus, Opera, Venedig, 1501

-

Wikimedia Commons, Johannes Amerbach, Johannes Marius Philelphus, Epistolarium novum, Basel, 1486

-

Wikimedia Commons, Hieronymus Andreae, Albrecht Dürer, Underweysung der messung mit dem zirckel, Nürnberg 1525

Team

Elias Kreyenbühl (left), Thomas Weibel at the «Génopode» building on the University of Lausanne campus.

-

Prof. Thomas Weibel, Thomas Weibel Multi & Media, University of Applied Sciences Chur

-

Dr. des. Elias Kreyenbühl, University Library of Basel

OpenGuesser

This is a game about guessing and learning about geography through images and maps, made with Swisstopo's online maps of Switzerland. For several years this game was developed as open source, part of a series of GeoAdmin StoryMaps: you can try the original SwissGuesser game here, based on a dataset from the Swiss Federal Archives now hosted at Opendata.swiss.

The new version puts your orienteering of Swiss museums to the test.

Demo: OpenGuesser Demo

Encouraged by an excellent Wikidata workshop (slides) at #GLAMhack 2017, we are testing a new dataset of Swiss museums, their locations and photos, obtained via the Wikidata Linked Data endpoint (see app/data/*.sparql in the source). Visit this Wikidata Query for a preview of the first 10 results. This opens the possibility of connecting other sources, such as datasets tagged 'glam' on Opendata.swiss, and creating more custom games based on this engine.

We play-tested, revisited the data, then forked and started a refresh of the project. All libraries were updated, and we got rid of the old data loading mechanism, with the goal of connecting (later in real time) to open data sources. A bunch of improvement ideas are already proposed, and we would be glad to see more ideas and any contributions: please raise an Issue or Pull Request on GitHub if you're so inclined!

Data

-

GeoAdmin API via OpenLayers

Team

-

@pa_fonta

Wikidata Ontology Explorer

A small tool to get a quick feeling of an ontology on Wikidata.

Data

(None, but hopefully this helps you do stuff with your data :) )

Team

Dario Donati (Swiss National Museum) & Beat Estermann (Opendata.ch)

New Frontiers in Graph Queries

We begin with the observation that a typical SPARQL endpoint is not friendly in the eyes of an average user. Typical users of cultural databases include researchers in the humanities, museum professionals and the general public. Few of these people have any coding experience and few would feel comfortable translating their questions into a SPARQL query.

Moreover, the majority of the users expect searches of online collections to take something like the form of a regular Google search (names, a few words, or at the top end Boolean operators). This approach to search does not make use of the full potential of the graph-type databases that typically make SPARQL endpoints available. It simply does not occur to an average user to ask the database a query of the type “show me all book authors whose children or grandchildren were artists.”

The extensive possibilities that are offered by graph databases to researchers in the humanities go unexplored because of a lack of awareness of their capabilities and a shortage of information about how to exploit them. Even those academics who understand the potential of these resources and have some experience in using them, it is often difficult to get an overview of the semantics of complex datasets.

We therefore set out to develop a tool that:

-

simplifies the entry point of a SPARQL query into a form that is accessible to any user

-

opens ways to increase the awareness of users about the possibilities for querying graph databases

-

moves away from purely text-based searches to interfaces that are more visual

-

gives an overview to a user of what kinds of nodes and relations are available in a database

-

makes it possible to explore the data in a graphical way

-

makes it possible to formulate fundamentally new questions

-

makes it possible to work with the data in new ways

-

can eventually be applied to any SPARQL endpoint

Data

Wikidata

Team

-

Loïc Jaouen loic.jaouen@unil.ch

-

(Elena Chestnova elena.chestnova@usi.ch)

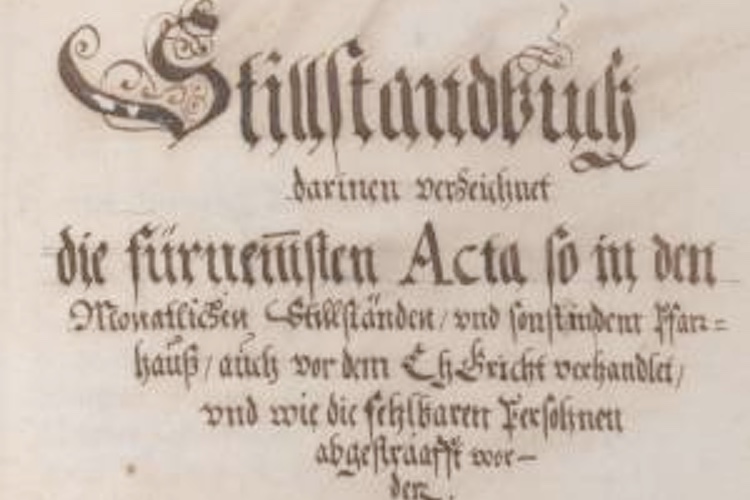

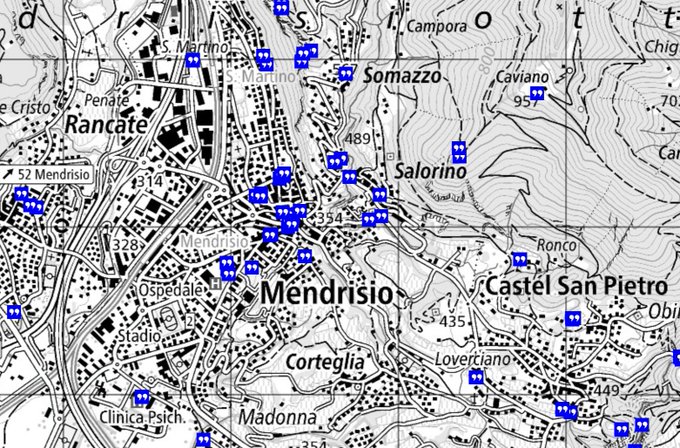

Sex and Crime und Kneippenschlägereien in Early Modern Zurich

Minutes reported by pastor in Early Modern Zurich.

Make the “Stillstandsprotokolle” searchable, georeferenced and browsable and display them on a map.

For more Info see our Github Repository

Access the documents: archives-quickaccess.ch/search/stazh/stpzh

Data

-

Primary Data

-

Secondary data

-

Siedlungsverzeichnis des Kantons Zürich: http://www.web.statistik.zh.ch/cms_siedlungsverzeichnis/daten.php

-

Team

-

Ernst Rosser, ernst.rosser@gmail.com

-

Tobias Hodel, tobias.hodel@ji.zh.ch

-

Barbara Leimgruber, Barbara.Leimgruber@ji.zh.ch

-

Rebekka Plüss, Rebekka.Pluess@ji.zh.ch

-

Ismail Prada, ismail.prada@gmail.com

-

Matthias Mazenauer, matthias.mazenauer@statistik.ji.zh.ch

Wikidata-based multilingual library search

In Switzerland each linguistic region is working with different authority files for authors and organizations, situation which brings difficulties for the end user when he is doing a search.

Goal of the Hackathon: work on a innovative solution as the library landscape search platforms will change in next years.

Possible solution: Multilingual Entity File which links to GND, BnF, ICCU Authority files and Wikidata to bring end user information about authors in the language he wants.

Steps:

-

analyse coverage by wikidata of the RERO authority file (20-30%)

-

testing approach to load some RERO authorities in wikidata (learn process)

-

create an intermediate process using GND ID to get description information and wikidata ID

-

from wikidata get the others identifiers (BnF, RERO,etc)

-

analyse which element from the GND are in wikidata, same for BnF, VIAF and ICCU

-

create a multilingual search prototype (based on Swissbib model)

Data

Number of ID in VIAF

-

BNF: 4847978

-

GND: 8922043

-

RERO: 255779

Number of ID in Wikidata from

-

BNF: 432273

-

GND: 693381

-

RERO: 2145

-

ICCU: 30047

-

VIAF: 1319031 (many duplicates: a WD-entity can have more than one VIAF ID)

Query model:

wikidata item with rero id

#All items with a property

# Sample to query all values of a property